Mirror of https://github.com/portainer/portainer.git (synced 2025-08-04 05:15:25 +02:00)

Merge branch 'release/1.20.0'


commit dbda568481

389 changed files with 11012 additions and 1893 deletions

|

|

@ -77,14 +77,14 @@ The subject contains succinct description of the change:

|

||||||

|

|

||||||

## Contribution process

|

## Contribution process

|

||||||

|

|

||||||

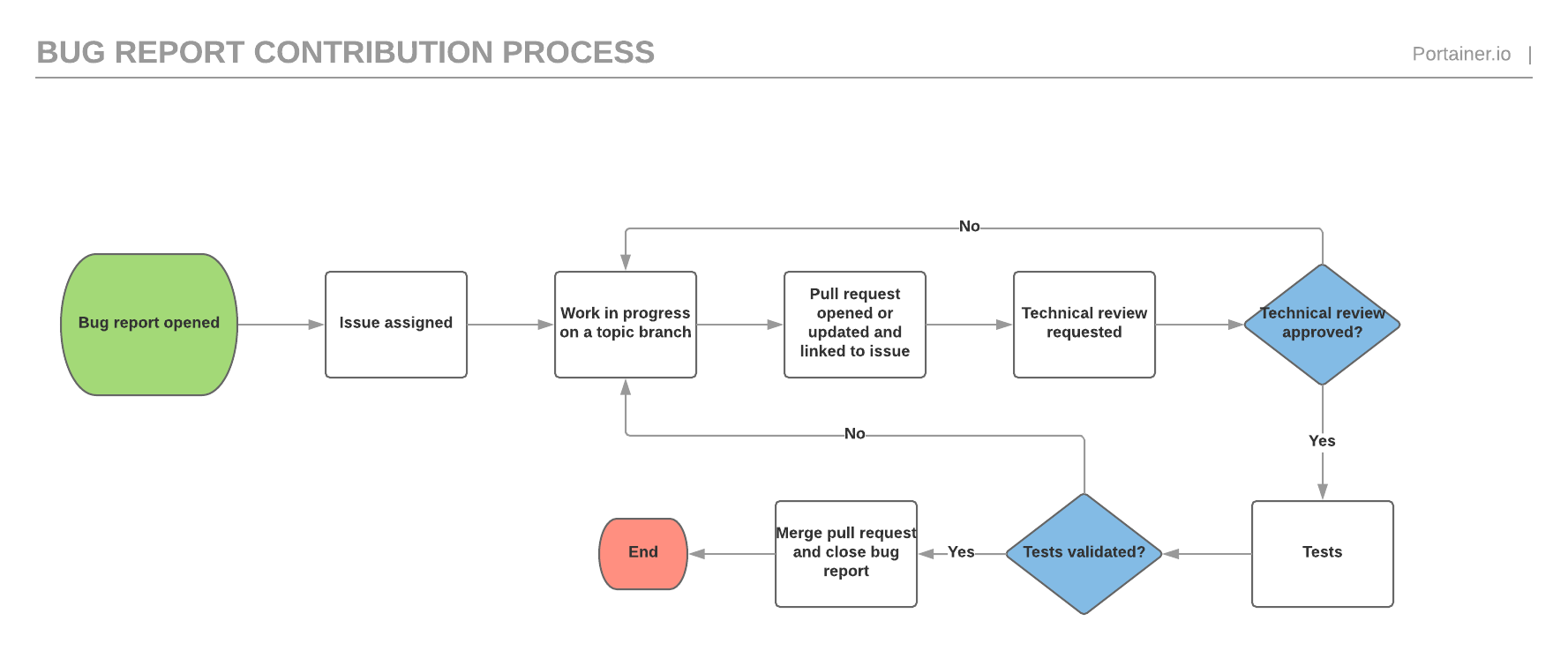

Our contribution process is described below. Some of the steps can be visualized inside Github via specific `contrib/` labels, such as `contrib/func-review-in-progress` or `contrib/tech-review-approved`.

|

Our contribution process is described below. Some of the steps can be visualized inside Github via specific `status/` labels, such as `status/1-functional-review` or `status/2-technical-review`.

|

||||||

|

|

||||||

### Bug report

|

### Bug report

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

### Feature request

|

### Feature request

|

||||||

|

|

||||||

The feature request process is similar to the bug report process but has an extra functional validation before the technical validation.

|

The feature request process is similar to the bug report process but has an extra functional validation before the technical validation as well as a documentation validation before the testing phase.

|

||||||

|

|

||||||

|

|

||||||

|

|

|

||||||

|

|

@ -6,7 +6,7 @@

|

||||||

[](https://hub.docker.com/r/portainer/portainer/)

|

[](https://hub.docker.com/r/portainer/portainer/)

|

||||||

[](http://microbadger.com/images/portainer/portainer "Image size")

|

[](http://microbadger.com/images/portainer/portainer "Image size")

|

||||||

[](http://portainer.readthedocs.io/en/stable/?badge=stable)

|

[](http://portainer.readthedocs.io/en/stable/?badge=stable)

|

||||||

[](https://semaphoreci.com/portainer/portainer)

|

[](https://semaphoreci.com/portainer/portainer-ci)

|

||||||

[](https://codeclimate.com/github/portainer/portainer)

|

[](https://codeclimate.com/github/portainer/portainer)

|

||||||

[](https://portainer.io/slack/)

|

[](https://portainer.io/slack/)

|

||||||

[](https://gitter.im/portainer/Lobby?utm_source=badge&utm_medium=badge&utm_campaign=pr-badge)

|

[](https://gitter.im/portainer/Lobby?utm_source=badge&utm_medium=badge&utm_campaign=pr-badge)

|

||||||

|

|

|

||||||

|

|

@ -7,13 +7,13 @@ import (

|

||||||

|

|

||||||

// TarFileInBuffer will create a tar archive containing a single file named via fileName and using the content

|

// TarFileInBuffer will create a tar archive containing a single file named via fileName and using the content

|

||||||

// specified in fileContent. Returns the archive as a byte array.

|

// specified in fileContent. Returns the archive as a byte array.

|

||||||

func TarFileInBuffer(fileContent []byte, fileName string) ([]byte, error) {

|

func TarFileInBuffer(fileContent []byte, fileName string, mode int64) ([]byte, error) {

|

||||||

var buffer bytes.Buffer

|

var buffer bytes.Buffer

|

||||||

tarWriter := tar.NewWriter(&buffer)

|

tarWriter := tar.NewWriter(&buffer)

|

||||||

|

|

||||||

header := &tar.Header{

|

header := &tar.Header{

|

||||||

Name: fileName,

|

Name: fileName,

|

||||||

Mode: 0600,

|

Mode: mode,

|

||||||

Size: int64(len(fileContent)),

|

Size: int64(len(fileContent)),

|

||||||

}

|

}
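The hunk above only shows the signature change, so here is a minimal, self-contained sketch of how a `mode` parameter could flow into the tar header. It assumes the rest of the function writes the header and content and then closes the writer; that part is not visible in this diff. The lower-case names `tarFileInBuffer` and `readBack` are illustrative helpers, not Portainer functions.

```go
package main

import (
	"archive/tar"
	"bytes"
	"fmt"
	"io"
)

// tarFileInBuffer builds a tar archive holding a single file and returns its bytes.
// mode carries the permission bits written to the tar header (for example 0600 or 0700).
func tarFileInBuffer(fileContent []byte, fileName string, mode int64) ([]byte, error) {
	var buffer bytes.Buffer
	tarWriter := tar.NewWriter(&buffer)

	header := &tar.Header{
		Name: fileName,
		Mode: mode,
		Size: int64(len(fileContent)),
	}
	if err := tarWriter.WriteHeader(header); err != nil {
		return nil, err
	}
	if _, err := tarWriter.Write(fileContent); err != nil {
		return nil, err
	}
	// Close writes the archive trailer; without it the archive is incomplete.
	if err := tarWriter.Close(); err != nil {
		return nil, err
	}
	return buffer.Bytes(), nil
}

// readBack returns the mode and content of the first entry in a tar archive.
func readBack(archive []byte) (int64, string, error) {
	reader := tar.NewReader(bytes.NewReader(archive))
	header, err := reader.Next()
	if err != nil {
		return 0, "", err
	}
	content, err := io.ReadAll(reader)
	if err != nil {
		return 0, "", err
	}
	return header.Mode, string(content), nil
}

func main() {
	archive, err := tarFileInBuffer([]byte("echo hello\n"), "job.sh", 0700)
	if err != nil {
		panic(err)
	}
	mode, content, err := readBack(archive)
	if err != nil {
		panic(err)
	}
	fmt.Printf("mode=%o content=%q\n", mode, content)
}
```

Passing the mode from the caller, rather than hard-coding `0600`, lets one helper serve both private files and executable scripts.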

|

||||||

|

|

||||||

|

|

|

||||||

api/archive/zip.go (new file, 48 lines)

|

|

@ -0,0 +1,48 @@

|

||||||

|

package archive

|

||||||

|

|

||||||

|

import (

|

||||||

|

"archive/zip"

|

||||||

|

"bytes"

|

||||||

|

"io"

|

||||||

|

"io/ioutil"

|

||||||

|

"os"

|

||||||

|

"path/filepath"

|

||||||

|

)

|

||||||

|

|

||||||

|

// UnzipArchive will unzip an archive from bytes into the dest destination folder on disk

|

||||||

|

func UnzipArchive(archiveData []byte, dest string) error {

|

||||||

|

zipReader, err := zip.NewReader(bytes.NewReader(archiveData), int64(len(archiveData)))

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

|

||||||

|

for _, zipFile := range zipReader.File {

|

||||||

|

|

||||||

|

f, err := zipFile.Open()

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

defer f.Close()

|

||||||

|

|

||||||

|

data, err := ioutil.ReadAll(f)

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

|

||||||

|

fpath := filepath.Join(dest, zipFile.Name)

|

||||||

|

|

||||||

|

outFile, err := os.OpenFile(fpath, os.O_WRONLY|os.O_CREATE|os.O_TRUNC, zipFile.Mode())

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

|

||||||

|

_, err = io.Copy(outFile, bytes.NewReader(data))

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

|

||||||

|

outFile.Close()

|

||||||

|

}

|

||||||

|

|

||||||

|

return nil

|

||||||

|

}

|

||||||

|

|

@ -10,9 +10,11 @@ import (

|

||||||

"github.com/portainer/portainer/bolt/dockerhub"

|

"github.com/portainer/portainer/bolt/dockerhub"

|

||||||

"github.com/portainer/portainer/bolt/endpoint"

|

"github.com/portainer/portainer/bolt/endpoint"

|

||||||

"github.com/portainer/portainer/bolt/endpointgroup"

|

"github.com/portainer/portainer/bolt/endpointgroup"

|

||||||

|

"github.com/portainer/portainer/bolt/extension"

|

||||||

"github.com/portainer/portainer/bolt/migrator"

|

"github.com/portainer/portainer/bolt/migrator"

|

||||||

"github.com/portainer/portainer/bolt/registry"

|

"github.com/portainer/portainer/bolt/registry"

|

||||||

"github.com/portainer/portainer/bolt/resourcecontrol"

|

"github.com/portainer/portainer/bolt/resourcecontrol"

|

||||||

|

"github.com/portainer/portainer/bolt/schedule"

|

||||||

"github.com/portainer/portainer/bolt/settings"

|

"github.com/portainer/portainer/bolt/settings"

|

||||||

"github.com/portainer/portainer/bolt/stack"

|

"github.com/portainer/portainer/bolt/stack"

|

||||||

"github.com/portainer/portainer/bolt/tag"

|

"github.com/portainer/portainer/bolt/tag"

|

||||||

|

|

@ -38,6 +40,7 @@ type Store struct {

|

||||||

DockerHubService *dockerhub.Service

|

DockerHubService *dockerhub.Service

|

||||||

EndpointGroupService *endpointgroup.Service

|

EndpointGroupService *endpointgroup.Service

|

||||||

EndpointService *endpoint.Service

|

EndpointService *endpoint.Service

|

||||||

|

ExtensionService *extension.Service

|

||||||

RegistryService *registry.Service

|

RegistryService *registry.Service

|

||||||

ResourceControlService *resourcecontrol.Service

|

ResourceControlService *resourcecontrol.Service

|

||||||

SettingsService *settings.Service

|

SettingsService *settings.Service

|

||||||

|

|

@ -49,6 +52,7 @@ type Store struct {

|

||||||

UserService *user.Service

|

UserService *user.Service

|

||||||

VersionService *version.Service

|

VersionService *version.Service

|

||||||

WebhookService *webhook.Service

|

WebhookService *webhook.Service

|

||||||

|

ScheduleService *schedule.Service

|

||||||

}

|

}

|

||||||

|

|

||||||

// NewStore initializes a new Store and the associated services

|

// NewStore initializes a new Store and the associated services

|

||||||

|

|

@ -138,6 +142,7 @@ func (store *Store) MigrateData() error {

|

||||||

ResourceControlService: store.ResourceControlService,

|

ResourceControlService: store.ResourceControlService,

|

||||||

SettingsService: store.SettingsService,

|

SettingsService: store.SettingsService,

|

||||||

StackService: store.StackService,

|

StackService: store.StackService,

|

||||||

|

TemplateService: store.TemplateService,

|

||||||

UserService: store.UserService,

|

UserService: store.UserService,

|

||||||

VersionService: store.VersionService,

|

VersionService: store.VersionService,

|

||||||

FileService: store.fileService,

|

FileService: store.fileService,

|

||||||

|

|

@ -174,6 +179,12 @@ func (store *Store) initServices() error {

|

||||||

}

|

}

|

||||||

store.EndpointService = endpointService

|

store.EndpointService = endpointService

|

||||||

|

|

||||||

|

extensionService, err := extension.NewService(store.db)

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

store.ExtensionService = extensionService

|

||||||

|

|

||||||

registryService, err := registry.NewService(store.db)

|

registryService, err := registry.NewService(store.db)

|

||||||

if err != nil {

|

if err != nil {

|

||||||

return err

|

return err

|

||||||

|

|

@ -240,5 +251,11 @@ func (store *Store) initServices() error {

|

||||||

}

|

}

|

||||||

store.WebhookService = webhookService

|

store.WebhookService = webhookService

|

||||||

|

|

||||||

|

scheduleService, err := schedule.NewService(store.db)

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

store.ScheduleService = scheduleService

|

||||||

|

|

||||||

return nil

|

return nil

|

||||||

}

|

}

|

||||||

|

|

|

||||||

api/bolt/extension/extension.go (new file, 86 lines)

|

|

@ -0,0 +1,86 @@

|

||||||

|

package extension

|

||||||

|

|

||||||

|

import (

|

||||||

|

"github.com/portainer/portainer"

|

||||||

|

"github.com/portainer/portainer/bolt/internal"

|

||||||

|

|

||||||

|

"github.com/boltdb/bolt"

|

||||||

|

)

|

||||||

|

|

||||||

|

const (

|

||||||

|

// BucketName represents the name of the bucket where this service stores data.

|

||||||

|

BucketName = "extension"

|

||||||

|

)

|

||||||

|

|

||||||

|

// Service represents a service for managing extension data.

|

||||||

|

type Service struct {

|

||||||

|

db *bolt.DB

|

||||||

|

}

|

||||||

|

|

||||||

|

// NewService creates a new instance of a service.

|

||||||

|

func NewService(db *bolt.DB) (*Service, error) {

|

||||||

|

err := internal.CreateBucket(db, BucketName)

|

||||||

|

if err != nil {

|

||||||

|

return nil, err

|

||||||

|

}

|

||||||

|

|

||||||

|

return &Service{

|

||||||

|

db: db,

|

||||||

|

}, nil

|

||||||

|

}

|

||||||

|

|

||||||

|

// Extension returns an extension by ID.

|

||||||

|

func (service *Service) Extension(ID portainer.ExtensionID) (*portainer.Extension, error) {

|

||||||

|

var extension portainer.Extension

|

||||||

|

identifier := internal.Itob(int(ID))

|

||||||

|

|

||||||

|

err := internal.GetObject(service.db, BucketName, identifier, &extension)

|

||||||

|

if err != nil {

|

||||||

|

return nil, err

|

||||||

|

}

|

||||||

|

|

||||||

|

return &extension, nil

|

||||||

|

}

|

||||||

|

|

||||||

|

// Extensions returns an array containing all the extensions.

|

||||||

|

func (service *Service) Extensions() ([]portainer.Extension, error) {

|

||||||

|

var extensions = make([]portainer.Extension, 0)

|

||||||

|

|

||||||

|

err := service.db.View(func(tx *bolt.Tx) error {

|

||||||

|

bucket := tx.Bucket([]byte(BucketName))

|

||||||

|

|

||||||

|

cursor := bucket.Cursor()

|

||||||

|

for k, v := cursor.First(); k != nil; k, v = cursor.Next() {

|

||||||

|

var extension portainer.Extension

|

||||||

|

err := internal.UnmarshalObject(v, &extension)

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

extensions = append(extensions, extension)

|

||||||

|

}

|

||||||

|

|

||||||

|

return nil

|

||||||

|

})

|

||||||

|

|

||||||

|

return extensions, err

|

||||||

|

}

|

||||||

|

|

||||||

|

// Persist persists an extension inside the database.

|

||||||

|

func (service *Service) Persist(extension *portainer.Extension) error {

|

||||||

|

return service.db.Update(func(tx *bolt.Tx) error {

|

||||||

|

bucket := tx.Bucket([]byte(BucketName))

|

||||||

|

|

||||||

|

data, err := internal.MarshalObject(extension)

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

|

||||||

|

return bucket.Put(internal.Itob(int(extension.ID)), data)

|

||||||

|

})

|

||||||

|

}

|

||||||

|

|

||||||

|

// DeleteExtension deletes an extension.

|

||||||

|

func (service *Service) DeleteExtension(ID portainer.ExtensionID) error {

|

||||||

|

identifier := internal.Itob(int(ID))

|

||||||

|

return internal.DeleteObject(service.db, BucketName, identifier)

|

||||||

|

}

|

||||||

api/bolt/migrator/migrate_dbversion14.go (new file, 35 lines)

|

|

@ -0,0 +1,35 @@

|

||||||

|

package migrator

|

||||||

|

|

||||||

|

import (

|

||||||

|

"strings"

|

||||||

|

|

||||||

|

"github.com/portainer/portainer"

|

||||||

|

)

|

||||||

|

|

||||||

|

func (m *Migrator) updateSettingsToDBVersion15() error {

|

||||||

|

legacySettings, err := m.settingsService.Settings()

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

|

||||||

|

legacySettings.EnableHostManagementFeatures = false

|

||||||

|

return m.settingsService.UpdateSettings(legacySettings)

|

||||||

|

}

|

||||||

|

|

||||||

|

func (m *Migrator) updateTemplatesToVersion15() error {

|

||||||

|

legacyTemplates, err := m.templateService.Templates()

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

|

||||||

|

for _, template := range legacyTemplates {

|

||||||

|

template.Logo = strings.Replace(template.Logo, "https://portainer.io/images", portainer.AssetsServerURL, -1)

|

||||||

|

|

||||||

|

err = m.templateService.UpdateTemplate(template.ID, &template)

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

return nil

|

||||||

|

}
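The template migration above rewrites a URL prefix with `strings.Replace` and a count of `-1` (replace all occurrences). A minimal sketch of that rewrite follows; `assetsServerURL` is a hypothetical stand-in for `portainer.AssetsServerURL`, whose real value is not shown in this diff, and the `template` struct is reduced to the two fields the example needs.

```go
package main

import (
	"fmt"
	"strings"
)

// assetsServerURL is a hypothetical placeholder for portainer.AssetsServerURL.
const assetsServerURL = "https://assets.example.invalid"

// legacyPrefix is the prefix replaced by the migration in the diff.
const legacyPrefix = "https://portainer.io/images"

// template is a reduced stand-in for portainer.Template.
type template struct {
	ID   int
	Logo string
}

// rewriteLogos replaces the legacy prefix in every logo URL, in place.
// Indexing the slice (instead of ranging by value) updates the stored elements.
func rewriteLogos(templates []template) {
	for i := range templates {
		templates[i].Logo = strings.Replace(templates[i].Logo, legacyPrefix, assetsServerURL, -1)
	}
}

func main() {
	templates := []template{
		{ID: 1, Logo: "https://portainer.io/images/logos/nginx.png"},
		{ID: 2, Logo: "https://example.org/custom.png"},
	}
	rewriteLogos(templates)
	for _, t := range templates {
		fmt.Println(t.ID, t.Logo)
	}
}
```

Logos that do not contain the legacy prefix pass through unchanged, so the migration is safe to run on user-defined templates.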

|

||||||

|

|

@ -8,6 +8,7 @@ import (

|

||||||

"github.com/portainer/portainer/bolt/resourcecontrol"

|

"github.com/portainer/portainer/bolt/resourcecontrol"

|

||||||

"github.com/portainer/portainer/bolt/settings"

|

"github.com/portainer/portainer/bolt/settings"

|

||||||

"github.com/portainer/portainer/bolt/stack"

|

"github.com/portainer/portainer/bolt/stack"

|

||||||

|

"github.com/portainer/portainer/bolt/template"

|

||||||

"github.com/portainer/portainer/bolt/user"

|

"github.com/portainer/portainer/bolt/user"

|

||||||

"github.com/portainer/portainer/bolt/version"

|

"github.com/portainer/portainer/bolt/version"

|

||||||

)

|

)

|

||||||

|

|

@ -22,6 +23,7 @@ type (

|

||||||

resourceControlService *resourcecontrol.Service

|

resourceControlService *resourcecontrol.Service

|

||||||

settingsService *settings.Service

|

settingsService *settings.Service

|

||||||

stackService *stack.Service

|

stackService *stack.Service

|

||||||

|

templateService *template.Service

|

||||||

userService *user.Service

|

userService *user.Service

|

||||||

versionService *version.Service

|

versionService *version.Service

|

||||||

fileService portainer.FileService

|

fileService portainer.FileService

|

||||||

|

|

@ -36,6 +38,7 @@ type (

|

||||||

ResourceControlService *resourcecontrol.Service

|

ResourceControlService *resourcecontrol.Service

|

||||||

SettingsService *settings.Service

|

SettingsService *settings.Service

|

||||||

StackService *stack.Service

|

StackService *stack.Service

|

||||||

|

TemplateService *template.Service

|

||||||

UserService *user.Service

|

UserService *user.Service

|

||||||

VersionService *version.Service

|

VersionService *version.Service

|

||||||

FileService portainer.FileService

|

FileService portainer.FileService

|

||||||

|

|

@ -51,6 +54,7 @@ func NewMigrator(parameters *Parameters) *Migrator {

|

||||||

endpointService: parameters.EndpointService,

|

endpointService: parameters.EndpointService,

|

||||||

resourceControlService: parameters.ResourceControlService,

|

resourceControlService: parameters.ResourceControlService,

|

||||||

settingsService: parameters.SettingsService,

|

settingsService: parameters.SettingsService,

|

||||||

|

templateService: parameters.TemplateService,

|

||||||

stackService: parameters.StackService,

|

stackService: parameters.StackService,

|

||||||

userService: parameters.UserService,

|

userService: parameters.UserService,

|

||||||

versionService: parameters.VersionService,

|

versionService: parameters.VersionService,

|

||||||

|

|

@ -186,5 +190,18 @@ func (m *Migrator) Migrate() error {

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

|

// Portainer 1.20.0

|

||||||

|

if m.currentDBVersion < 15 {

|

||||||

|

err := m.updateSettingsToDBVersion15()

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

|

||||||

|

err = m.updateTemplatesToVersion15()

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

return m.versionService.StoreDBVersion(portainer.DBVersion)

|

return m.versionService.StoreDBVersion(portainer.DBVersion)

|

||||||

}

|

}

|

||||||

|

|

|

||||||

api/bolt/schedule/schedule.go (new file, 129 lines)

|

|

@ -0,0 +1,129 @@

|

||||||

|

package schedule

|

||||||

|

|

||||||

|

import (

|

||||||

|

"github.com/portainer/portainer"

|

||||||

|

"github.com/portainer/portainer/bolt/internal"

|

||||||

|

|

||||||

|

"github.com/boltdb/bolt"

|

||||||

|

)

|

||||||

|

|

||||||

|

const (

|

||||||

|

// BucketName represents the name of the bucket where this service stores data.

|

||||||

|

BucketName = "schedules"

|

||||||

|

)

|

||||||

|

|

||||||

|

// Service represents a service for managing schedule data.

|

||||||

|

type Service struct {

|

||||||

|

db *bolt.DB

|

||||||

|

}

|

||||||

|

|

||||||

|

// NewService creates a new instance of a service.

|

||||||

|

func NewService(db *bolt.DB) (*Service, error) {

|

||||||

|

err := internal.CreateBucket(db, BucketName)

|

||||||

|

if err != nil {

|

||||||

|

return nil, err

|

||||||

|

}

|

||||||

|

|

||||||

|

return &Service{

|

||||||

|

db: db,

|

||||||

|

}, nil

|

||||||

|

}

|

||||||

|

|

||||||

|

// Schedule returns a schedule by ID.

|

||||||

|

func (service *Service) Schedule(ID portainer.ScheduleID) (*portainer.Schedule, error) {

|

||||||

|

var schedule portainer.Schedule

|

||||||

|

identifier := internal.Itob(int(ID))

|

||||||

|

|

||||||

|

err := internal.GetObject(service.db, BucketName, identifier, &schedule)

|

||||||

|

if err != nil {

|

||||||

|

return nil, err

|

||||||

|

}

|

||||||

|

|

||||||

|

return &schedule, nil

|

||||||

|

}

|

||||||

|

|

||||||

|

// UpdateSchedule updates a schedule.

|

||||||

|

func (service *Service) UpdateSchedule(ID portainer.ScheduleID, schedule *portainer.Schedule) error {

|

||||||

|

identifier := internal.Itob(int(ID))

|

||||||

|

return internal.UpdateObject(service.db, BucketName, identifier, schedule)

|

||||||

|

}

|

||||||

|

|

||||||

|

// DeleteSchedule deletes a schedule.

|

||||||

|

func (service *Service) DeleteSchedule(ID portainer.ScheduleID) error {

|

||||||

|

identifier := internal.Itob(int(ID))

|

||||||

|

return internal.DeleteObject(service.db, BucketName, identifier)

|

||||||

|

}

|

||||||

|

|

||||||

|

// Schedules returns an array containing all the schedules.

|

||||||

|

func (service *Service) Schedules() ([]portainer.Schedule, error) {

|

||||||

|

var schedules = make([]portainer.Schedule, 0)

|

||||||

|

|

||||||

|

err := service.db.View(func(tx *bolt.Tx) error {

|

||||||

|

bucket := tx.Bucket([]byte(BucketName))

|

||||||

|

|

||||||

|

cursor := bucket.Cursor()

|

||||||

|

for k, v := cursor.First(); k != nil; k, v = cursor.Next() {

|

||||||

|

var schedule portainer.Schedule

|

||||||

|

err := internal.UnmarshalObject(v, &schedule)

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

schedules = append(schedules, schedule)

|

||||||

|

}

|

||||||

|

|

||||||

|

return nil

|

||||||

|

})

|

||||||

|

|

||||||

|

return schedules, err

|

||||||

|

}

|

||||||

|

|

||||||

|

// SchedulesByJobType returns an array containing all the schedules

|

||||||

|

// with the specified JobType.

|

||||||

|

func (service *Service) SchedulesByJobType(jobType portainer.JobType) ([]portainer.Schedule, error) {

|

||||||

|

var schedules = make([]portainer.Schedule, 0)

|

||||||

|

|

||||||

|

err := service.db.View(func(tx *bolt.Tx) error {

|

||||||

|

bucket := tx.Bucket([]byte(BucketName))

|

||||||

|

|

||||||

|

cursor := bucket.Cursor()

|

||||||

|

for k, v := cursor.First(); k != nil; k, v = cursor.Next() {

|

||||||

|

var schedule portainer.Schedule

|

||||||

|

err := internal.UnmarshalObject(v, &schedule)

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

if schedule.JobType == jobType {

|

||||||

|

schedules = append(schedules, schedule)

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

return nil

|

||||||

|

})

|

||||||

|

|

||||||

|

return schedules, err

|

||||||

|

}

|

||||||

|

|

||||||

|

// CreateSchedule assigns an ID to a new schedule and saves it.

|

||||||

|

func (service *Service) CreateSchedule(schedule *portainer.Schedule) error {

|

||||||

|

return service.db.Update(func(tx *bolt.Tx) error {

|

||||||

|

bucket := tx.Bucket([]byte(BucketName))

|

||||||

|

|

||||||

|

// We manually manage sequences for schedules

|

||||||

|

err := bucket.SetSequence(uint64(schedule.ID))

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

|

||||||

|

data, err := internal.MarshalObject(schedule)

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

|

||||||

|

return bucket.Put(internal.Itob(int(schedule.ID)), data)

|

||||||

|

})

|

||||||

|

}

|

||||||

|

|

||||||

|

// GetNextIdentifier returns the next identifier for a schedule.

|

||||||

|

func (service *Service) GetNextIdentifier() int {

|

||||||

|

return internal.GetNextIdentifier(service.db, BucketName)

|

||||||

|

}

|

||||||

|

|

@ -2,7 +2,9 @@ package main // import "github.com/portainer/portainer"

|

||||||

|

|

||||||

import (

|

import (

|

||||||

"encoding/json"

|

"encoding/json"

|

||||||

|

"os"

|

||||||

"strings"

|

"strings"

|

||||||

|

"time"

|

||||||

|

|

||||||

"github.com/portainer/portainer"

|

"github.com/portainer/portainer"

|

||||||

"github.com/portainer/portainer/bolt"

|

"github.com/portainer/portainer/bolt"

|

||||||

|

|

@ -87,7 +89,7 @@ func initJWTService(authenticationEnabled bool) portainer.JWTService {

|

||||||

}

|

}

|

||||||

|

|

||||||

func initDigitalSignatureService() portainer.DigitalSignatureService {

|

func initDigitalSignatureService() portainer.DigitalSignatureService {

|

||||||

return &crypto.ECDSAService{}

|

return crypto.NewECDSAService(os.Getenv("AGENT_SECRET"))

|

||||||

}

|

}

|

||||||

|

|

||||||

func initCryptoService() portainer.CryptoService {

|

func initCryptoService() portainer.CryptoService {

|

||||||

|

|

@ -110,25 +112,110 @@ func initSnapshotter(clientFactory *docker.ClientFactory) portainer.Snapshotter

|

||||||

return docker.NewSnapshotter(clientFactory)

|

return docker.NewSnapshotter(clientFactory)

|

||||||

}

|

}

|

||||||

|

|

||||||

func initJobScheduler(endpointService portainer.EndpointService, snapshotter portainer.Snapshotter, flags *portainer.CLIFlags) (portainer.JobScheduler, error) {

|

func initJobScheduler() portainer.JobScheduler {

|

||||||

jobScheduler := cron.NewJobScheduler(endpointService, snapshotter)

|

return cron.NewJobScheduler()

|

||||||

|

}

|

||||||

|

|

||||||

|

func loadSnapshotSystemSchedule(jobScheduler portainer.JobScheduler, snapshotter portainer.Snapshotter, scheduleService portainer.ScheduleService, endpointService portainer.EndpointService, settingsService portainer.SettingsService) error {

|

||||||

|

settings, err := settingsService.Settings()

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

|

||||||

|

schedules, err := scheduleService.SchedulesByJobType(portainer.SnapshotJobType)

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

|

||||||

|

var snapshotSchedule *portainer.Schedule

|

||||||

|

if len(schedules) == 0 {

|

||||||

|

snapshotJob := &portainer.SnapshotJob{}

|

||||||

|

snapshotSchedule = &portainer.Schedule{

|

||||||

|

ID: portainer.ScheduleID(scheduleService.GetNextIdentifier()),

|

||||||

|

Name: "system_snapshot",

|

||||||

|

CronExpression: "@every " + settings.SnapshotInterval,

|

||||||

|

Recurring: true,

|

||||||

|

JobType: portainer.SnapshotJobType,

|

||||||

|

SnapshotJob: snapshotJob,

|

||||||

|

Created: time.Now().Unix(),

|

||||||

|

}

|

||||||

|

} else {

|

||||||

|

snapshotSchedule = &schedules[0]

|

||||||

|

}

|

||||||

|

|

||||||

|

snapshotJobContext := cron.NewSnapshotJobContext(endpointService, snapshotter)

|

||||||

|

snapshotJobRunner := cron.NewSnapshotJobRunner(snapshotSchedule, snapshotJobContext)

|

||||||

|

|

||||||

|

err = jobScheduler.ScheduleJob(snapshotJobRunner)

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

|

||||||

|

if len(schedules) == 0 {

|

||||||

|

return scheduleService.CreateSchedule(snapshotSchedule)

|

||||||

|

}

|

||||||

|

return nil

|

||||||

|

}
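`loadSnapshotSystemSchedule` builds its cron expression by concatenating `"@every "` with the configured interval. The `@every <duration>` form is the descriptor syntax of cron libraries such as robfig/cron, where the duration follows `time.ParseDuration`; which library backs `cron.NewJobScheduler` is not visible in this diff, so treat that as an assumption. The sketch below shows how the interval could be validated before the expression is stored. `everyExpression` is a hypothetical helper.

```go
package main

import (
	"fmt"
	"time"
)

// everyExpression validates interval (for example "5m" or "1h30m") and returns
// the matching "@every <interval>" expression.
func everyExpression(interval string) (string, error) {
	duration, err := time.ParseDuration(interval)
	if err != nil {
		return "", fmt.Errorf("invalid interval %q: %w", interval, err)
	}
	if duration <= 0 {
		return "", fmt.Errorf("interval must be positive, got %q", interval)
	}
	return "@every " + interval, nil
}

func main() {
	for _, interval := range []string{"5m", "soon", "-1s"} {
		expression, err := everyExpression(interval)
		if err != nil {
			fmt.Println("rejected:", err)
			continue
		}
		fmt.Println("accepted:", expression)
	}
}
```

Validating up front surfaces a bad `SnapshotInterval` setting at startup instead of when the scheduler first parses the expression.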

|

||||||

|

|

||||||

|

func loadEndpointSyncSystemSchedule(jobScheduler portainer.JobScheduler, scheduleService portainer.ScheduleService, endpointService portainer.EndpointService, flags *portainer.CLIFlags) error {

|

||||||

|

if *flags.ExternalEndpoints == "" {

|

||||||

|

return nil

|

||||||

|

}

|

||||||

|

|

||||||

if *flags.ExternalEndpoints != "" {

|

|

||||||

log.Println("Using external endpoint definition. Endpoint management via the API will be disabled.")

|

log.Println("Using external endpoint definition. Endpoint management via the API will be disabled.")

|

||||||

err := jobScheduler.ScheduleEndpointSyncJob(*flags.ExternalEndpoints, *flags.SyncInterval)

|

|

||||||

|

schedules, err := scheduleService.SchedulesByJobType(portainer.EndpointSyncJobType)

|

||||||

if err != nil {

|

if err != nil {

|

||||||

return nil, err

|

return err

|

||||||

|

}

|

||||||

|

|

||||||

|

if len(schedules) != 0 {

|

||||||

|

return nil

|

||||||

|

}

|

||||||

|

|

||||||

|

endpointSyncJob := &portainer.EndpointSyncJob{}

|

||||||

|

|

||||||

|

endointSyncSchedule := &portainer.Schedule{

|

||||||

|

ID: portainer.ScheduleID(scheduleService.GetNextIdentifier()),

|

||||||

|

Name: "system_endpointsync",

|

||||||

|

CronExpression: "@every " + *flags.SyncInterval,

|

||||||

|

Recurring: true,

|

||||||

|

JobType: portainer.EndpointSyncJobType,

|

||||||

|

EndpointSyncJob: endpointSyncJob,

|

||||||

|

Created: time.Now().Unix(),

|

||||||

|

}

|

||||||

|

|

||||||

|

endpointSyncJobContext := cron.NewEndpointSyncJobContext(endpointService, *flags.ExternalEndpoints)

|

||||||

|

endpointSyncJobRunner := cron.NewEndpointSyncJobRunner(endointSyncSchedule, endpointSyncJobContext)

|

||||||

|

|

||||||

|

err = jobScheduler.ScheduleJob(endpointSyncJobRunner)

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

|

||||||

|

return scheduleService.CreateSchedule(endointSyncSchedule)

|

||||||

|

}

|

||||||

|

|

||||||

|

func loadSchedulesFromDatabase(jobScheduler portainer.JobScheduler, jobService portainer.JobService, scheduleService portainer.ScheduleService, endpointService portainer.EndpointService, fileService portainer.FileService) error {

|

||||||

|

schedules, err := scheduleService.Schedules()

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

|

||||||

|

for _, schedule := range schedules {

|

||||||

|

|

||||||

|

if schedule.JobType == portainer.ScriptExecutionJobType {

|

||||||

|

jobContext := cron.NewScriptExecutionJobContext(jobService, endpointService, fileService)

|

||||||

|

jobRunner := cron.NewScriptExecutionJobRunner(&schedule, jobContext)

|

||||||

|

|

||||||

|

err = jobScheduler.ScheduleJob(jobRunner)

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

if *flags.Snapshot {

|

return nil

|

||||||

err := jobScheduler.ScheduleSnapshotJob(*flags.SnapshotInterval)

|

|

||||||

if err != nil {

|

|

||||||

return nil, err

|

|

||||||

}

|

|

||||||

}

|

|

||||||

|

|

||||||

return jobScheduler, nil

|

|

||||||

}

|

}
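Review note on `loadSchedulesFromDatabase`: it passes `&schedule`, the address of the range variable, to `cron.NewScriptExecutionJobRunner`. Before Go 1.22 that variable is reused on every iteration, so if the runner keeps the pointer, every runner would end up observing the last schedule. The sketch below shows the index-based alternative, which behaves the same on all Go versions. The `schedule` struct and `schedulePointers` are simplified, hypothetical stand-ins.

```go
package main

import "fmt"

// schedule is a reduced stand-in for portainer.Schedule.
type schedule struct {
	ID   int
	Name string
}

// schedulePointers returns one pointer per slice element.
// Taking &schedules[i] addresses the element itself, not a loop variable,
// so each pointer stays distinct regardless of the Go version.
func schedulePointers(schedules []schedule) []*schedule {
	pointers := make([]*schedule, 0, len(schedules))
	for i := range schedules {
		pointers = append(pointers, &schedules[i])
	}
	return pointers
}

func main() {
	schedules := []schedule{{ID: 1, Name: "backup"}, {ID: 2, Name: "cleanup"}}
	for _, pointer := range schedulePointers(schedules) {
		fmt.Println(pointer.ID, pointer.Name)
	}
}
```

The same pattern applies to the `&extension` argument in `initExtensionManager`.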

|

||||||

|

|

||||||

func initStatus(endpointManagement, snapshot bool, flags *portainer.CLIFlags) *portainer.Status {

|

func initStatus(endpointManagement, snapshot bool, flags *portainer.CLIFlags) *portainer.Status {

|

||||||

|

|

@ -175,6 +262,7 @@ func initSettings(settingsService portainer.SettingsService, flags *portainer.CL

|

||||||

},

|

},

|

||||||

AllowBindMountsForRegularUsers: true,

|

AllowBindMountsForRegularUsers: true,

|

||||||

AllowPrivilegedModeForRegularUsers: true,

|

AllowPrivilegedModeForRegularUsers: true,

|

||||||

|

EnableHostManagementFeatures: false,

|

||||||

SnapshotInterval: *flags.SnapshotInterval,

|

SnapshotInterval: *flags.SnapshotInterval,

|

||||||

}

|

}

|

||||||

|

|

||||||

|

|

@ -383,6 +471,43 @@ func initEndpoint(flags *portainer.CLIFlags, endpointService portainer.EndpointS

|

||||||

return createUnsecuredEndpoint(*flags.EndpointURL, endpointService, snapshotter)

|

return createUnsecuredEndpoint(*flags.EndpointURL, endpointService, snapshotter)

|

||||||

}

|

}

|

||||||

|

|

||||||

|

func initJobService(dockerClientFactory *docker.ClientFactory) portainer.JobService {

|

||||||

|

return docker.NewJobService(dockerClientFactory)

|

||||||

|

}

|

||||||

|

|

||||||

|

func initExtensionManager(fileService portainer.FileService, extensionService portainer.ExtensionService) (portainer.ExtensionManager, error) {

|

||||||

|

extensionManager := exec.NewExtensionManager(fileService, extensionService)

|

||||||

|

|

||||||

|

extensions, err := extensionService.Extensions()

|

||||||

|

if err != nil {

|

||||||

|

return nil, err

|

||||||

|

}

|

||||||

|

|

||||||

|

for _, extension := range extensions {

|

||||||

|

err := extensionManager.EnableExtension(&extension, extension.License.LicenseKey)

|

||||||

|

if err != nil {

|

||||||

|

return nil, err

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

return extensionManager, nil

|

||||||

|

}

|

||||||

|

|

||||||

|

func terminateIfNoAdminCreated(userService portainer.UserService) {

|

||||||

|

timer1 := time.NewTimer(5 * time.Minute)

|

||||||

|

<-timer1.C

|

||||||

|

|

||||||

|

users, err := userService.UsersByRole(portainer.AdministratorRole)

|

||||||

|

if err != nil {

|

||||||

|

log.Fatal(err)

|

||||||

|

}

|

||||||

|

|

||||||

|

if len(users) == 0 {

|

||||||

|

log.Fatal("No administrator account was created after 5 min. Shutting down the Portainer instance for security reasons.")

|

||||||

|

return

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

func main() {

|

func main() {

|

||||||

flags := initCLI()

|

flags := initCLI()

|

||||||

|

|

||||||

|

|

@ -406,16 +531,16 @@ func main() {

|

||||||

log.Fatal(err)

|

log.Fatal(err)

|

||||||

}

|

}

|

||||||

|

|

||||||

clientFactory := initClientFactory(digitalSignatureService)

|

extensionManager, err := initExtensionManager(fileService, store.ExtensionService)

|

||||||

|

|

||||||

snapshotter := initSnapshotter(clientFactory)

|

|

||||||

|

|

||||||

jobScheduler, err := initJobScheduler(store.EndpointService, snapshotter, flags)

|

|

||||||

if err != nil {

|

if err != nil {

|

||||||

log.Fatal(err)

|

log.Fatal(err)

|

||||||

}

|

}

|

||||||

|

|

||||||

jobScheduler.Start()

|

clientFactory := initClientFactory(digitalSignatureService)

|

||||||

|

|

||||||

|

jobService := initJobService(clientFactory)

|

||||||

|

|

||||||

|

snapshotter := initSnapshotter(clientFactory)

|

||||||

|

|

||||||

endpointManagement := true

|

endpointManagement := true

|

||||||

if *flags.ExternalEndpoints != "" {

|

if *flags.ExternalEndpoints != "" {

|

||||||

|

|

@ -439,6 +564,27 @@ func main() {

|

||||||

log.Fatal(err)

|

log.Fatal(err)

|

||||||

}

|

}

|

||||||

|

|

||||||

|

jobScheduler := initJobScheduler()

|

||||||

|

|

||||||

|

err = loadSchedulesFromDatabase(jobScheduler, jobService, store.ScheduleService, store.EndpointService, fileService)

|

||||||

|

if err != nil {

|

||||||

|

log.Fatal(err)

|

||||||

|

}

|

||||||

|

|

||||||

|

err = loadEndpointSyncSystemSchedule(jobScheduler, store.ScheduleService, store.EndpointService, flags)

|

||||||

|

if err != nil {

|

||||||

|

log.Fatal(err)

|

||||||

|

}

|

||||||

|

|

||||||

|

if *flags.Snapshot {

|

||||||

|

err = loadSnapshotSystemSchedule(jobScheduler, snapshotter, store.ScheduleService, store.EndpointService, store.SettingsService)

|

||||||

|

if err != nil {

|

||||||

|

log.Fatal(err)

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

jobScheduler.Start()

|

||||||

|

|

||||||

err = initDockerHub(store.DockerHubService)

|

err = initDockerHub(store.DockerHubService)

|

||||||

if err != nil {

|

if err != nil {

|

||||||

log.Fatal(err)

|

log.Fatal(err)

|

||||||

|

|

@ -487,6 +633,10 @@ func main() {

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

|

if !*flags.NoAuth {

|

||||||

|

go terminateIfNoAdminCreated(store.UserService)

|

||||||

|

}

|

||||||

|

|

||||||

var server portainer.Server = &http.Server{

|

var server portainer.Server = &http.Server{

|

||||||

Status: applicationStatus,

|

Status: applicationStatus,

|

||||||

BindAddress: *flags.Addr,

|

BindAddress: *flags.Addr,

|

||||||

|

|

@ -498,16 +648,19 @@ func main() {

|

||||||

TeamMembershipService: store.TeamMembershipService,

|

TeamMembershipService: store.TeamMembershipService,

|

||||||

EndpointService: store.EndpointService,

|

EndpointService: store.EndpointService,

|

||||||

EndpointGroupService: store.EndpointGroupService,

|

EndpointGroupService: store.EndpointGroupService,

|

||||||

|

ExtensionService: store.ExtensionService,

|

||||||

ResourceControlService: store.ResourceControlService,

|

ResourceControlService: store.ResourceControlService,

|

||||||

SettingsService: store.SettingsService,

|

SettingsService: store.SettingsService,

|

||||||

RegistryService: store.RegistryService,

|

RegistryService: store.RegistryService,

|

||||||

DockerHubService: store.DockerHubService,

|

DockerHubService: store.DockerHubService,

|

||||||

StackService: store.StackService,

|

StackService: store.StackService,

|

||||||

|

ScheduleService: store.ScheduleService,

|

||||||

TagService: store.TagService,

|

TagService: store.TagService,

|

||||||

TemplateService: store.TemplateService,

|

TemplateService: store.TemplateService,

|

||||||

WebhookService: store.WebhookService,

|

WebhookService: store.WebhookService,

|

||||||

SwarmStackManager: swarmStackManager,

|

SwarmStackManager: swarmStackManager,

|

||||||

ComposeStackManager: composeStackManager,

|

ComposeStackManager: composeStackManager,

|

||||||

|

ExtensionManager: extensionManager,

|

||||||

CryptoService: cryptoService,

|

CryptoService: cryptoService,

|

||||||

JWTService: jwtService,

|

JWTService: jwtService,

|

||||||

FileService: fileService,

|

FileService: fileService,

|

||||||

|

|

@ -520,6 +673,7 @@ func main() {

|

||||||

SSLCert: *flags.SSLCert,

|

SSLCert: *flags.SSLCert,

|

||||||

SSLKey: *flags.SSLKey,

|

SSLKey: *flags.SSLKey,

|

||||||

DockerClientFactory: clientFactory,

|

DockerClientFactory: clientFactory,

|

||||||

|

JobService: jobService,

|

||||||

}

|

}

|

||||||

|

|

||||||

log.Printf("Starting Portainer %s on %s", portainer.APIVersion, *flags.Addr)

|

log.Printf("Starting Portainer %s on %s", portainer.APIVersion, *flags.Addr)

|

||||||

|

|

|

||||||

|

|

@ -1,60 +0,0 @@

|

||||||

package cron

|

|

||||||

|

|

||||||

import (

|

|

||||||

"log"

|

|

||||||

|

|

||||||

"github.com/portainer/portainer"

|

|

||||||

)

|

|

||||||

|

|

||||||

type (

|

|

||||||

endpointSnapshotJob struct {

|

|

||||||

endpointService portainer.EndpointService

|

|

||||||

snapshotter portainer.Snapshotter

|

|

||||||

}

|

|

||||||

)

|

|

||||||

|

|

||||||

func newEndpointSnapshotJob(endpointService portainer.EndpointService, snapshotter portainer.Snapshotter) endpointSnapshotJob {

|

|

||||||

return endpointSnapshotJob{

|

|

||||||

endpointService: endpointService,

|

|

||||||

snapshotter: snapshotter,

|

|

||||||

}

|

|

||||||

}

|

|

||||||

|

|

||||||

func (job endpointSnapshotJob) Snapshot() error {

|

|

||||||

|

|

||||||

endpoints, err := job.endpointService.Endpoints()

|

|

||||||

if err != nil {

|

|

||||||

return err

|

|

||||||

}

|

|

||||||

|

|

||||||

for _, endpoint := range endpoints {

|

|

||||||

if endpoint.Type == portainer.AzureEnvironment {

|

|

||||||

continue

|

|

||||||

}

|

|

||||||

|

|

||||||

snapshot, err := job.snapshotter.CreateSnapshot(&endpoint)

|

|

||||||

endpoint.Status = portainer.EndpointStatusUp

|

|

||||||

if err != nil {

|

|

||||||

log.Printf("cron error: endpoint snapshot error (endpoint=%s, URL=%s) (err=%s)\n", endpoint.Name, endpoint.URL, err)

|

|

||||||

endpoint.Status = portainer.EndpointStatusDown

|

|

||||||

}

|

|

||||||

|

|

||||||

if snapshot != nil {

|

|

||||||

endpoint.Snapshots = []portainer.Snapshot{*snapshot}

|

|

||||||

}

|

|

||||||

|

|

||||||

err = job.endpointService.UpdateEndpoint(endpoint.ID, &endpoint)

|

|

||||||

if err != nil {

|

|

||||||

return err

|

|

||||||

}

|

|

||||||

}

|

|

||||||

|

|

||||||

return nil

|

|

||||||

}

|

|

||||||

|

|

||||||

func (job endpointSnapshotJob) Run() {

|

|

||||||

err := job.Snapshot()

|

|

||||||

if err != nil {

|

|

||||||

log.Printf("cron error: snapshot job error (err=%s)\n", err)

|

|

||||||

}

|

|

||||||

}

|

|

||||||

|

|

@ -9,19 +9,41 @@ import (

|

||||||

"github.com/portainer/portainer"

|

"github.com/portainer/portainer"

|

||||||

)

|

)

|

||||||

|

|

||||||

type (

|

// EndpointSyncJobRunner is used to run an EndpointSyncJob

|

||||||

endpointSyncJob struct {

|

type EndpointSyncJobRunner struct {

|

||||||

|

schedule *portainer.Schedule

|

||||||

|

context *EndpointSyncJobContext

|

||||||

|

}

|

||||||

|

|

||||||

|

// EndpointSyncJobContext represents the context of execution of an EndpointSyncJob

|

||||||

|

type EndpointSyncJobContext struct {

|

||||||

endpointService portainer.EndpointService

|

endpointService portainer.EndpointService

|

||||||

endpointFilePath string

|

endpointFilePath string

|

||||||

}

|

}

|

||||||

|

|

||||||

synchronization struct {

|

// NewEndpointSyncJobContext returns a new context that can be used to execute an EndpointSyncJob

|

||||||

|

func NewEndpointSyncJobContext(endpointService portainer.EndpointService, endpointFilePath string) *EndpointSyncJobContext {

|

||||||

|

return &EndpointSyncJobContext{

|

||||||

|

endpointService: endpointService,

|

||||||

|

endpointFilePath: endpointFilePath,

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

// NewEndpointSyncJobRunner returns a new runner that can be scheduled

|

||||||

|

func NewEndpointSyncJobRunner(schedule *portainer.Schedule, context *EndpointSyncJobContext) *EndpointSyncJobRunner {

|

||||||

|

return &EndpointSyncJobRunner{

|

||||||

|

schedule: schedule,

|

||||||

|

context: context,

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

type synchronization struct {

|

||||||

endpointsToCreate []*portainer.Endpoint

|

endpointsToCreate []*portainer.Endpoint

|

||||||

endpointsToUpdate []*portainer.Endpoint

|

endpointsToUpdate []*portainer.Endpoint

|

||||||

endpointsToDelete []*portainer.Endpoint

|

endpointsToDelete []*portainer.Endpoint

|

||||||

}

|

}

|

||||||

|

|

||||||

fileEndpoint struct {

|

type fileEndpoint struct {

|

||||||

Name string `json:"Name"`

|

Name string `json:"Name"`

|

||||||

URL string `json:"URL"`

|

URL string `json:"URL"`

|

||||||

TLS bool `json:"TLS,omitempty"`

|

TLS bool `json:"TLS,omitempty"`

|

||||||

|

|

@ -29,24 +51,51 @@ type (

|

||||||

TLSCACert string `json:"TLSCACert,omitempty"`

|

TLSCACert string `json:"TLSCACert,omitempty"`

|

||||||

TLSCert string `json:"TLSCert,omitempty"`

|

TLSCert string `json:"TLSCert,omitempty"`

|

||||||

TLSKey string `json:"TLSKey,omitempty"`

|

TLSKey string `json:"TLSKey,omitempty"`

|

||||||

|

}
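Review note: the `fileEndpoint` struct above describes one entry of the external endpoint file that the synchronization runner reads. A minimal sketch of decoding such a file and rejecting an empty array, assuming only the fields visible in this diff, could look like this. `parseFileEndpoints` and `errEmptySource` are hypothetical names, and the sample addresses are made up.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// fileEndpoint mirrors only the fields visible in this diff; the real
// struct may carry more fields in the elided part of the hunk.
type fileEndpoint struct {
	Name      string `json:"Name"`
	URL       string `json:"URL"`
	TLS       bool   `json:"TLS,omitempty"`
	TLSCACert string `json:"TLSCACert,omitempty"`
	TLSCert   string `json:"TLSCert,omitempty"`
	TLSKey    string `json:"TLSKey,omitempty"`
}

// errEmptySource is a hypothetical sentinel for an empty endpoint array.
var errEmptySource = errors.New("external endpoint source is empty")

// parseFileEndpoints decodes a JSON array of endpoints and treats an
// empty array as an error, as the runner in this diff does.
func parseFileEndpoints(data []byte) ([]fileEndpoint, error) {
	var endpoints []fileEndpoint
	if err := json.Unmarshal(data, &endpoints); err != nil {
		return nil, err
	}
	if len(endpoints) == 0 {
		return nil, errEmptySource
	}
	return endpoints, nil
}

func main() {
	data := []byte(`[
		{"Name": "local", "URL": "tcp://10.0.0.10:2375"},
		{"Name": "remote", "URL": "tcp://10.0.0.11:2376", "TLS": true}
	]`)

	endpoints, err := parseFileEndpoints(data)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	for _, e := range endpoints {
		fmt.Printf("%s %s tls=%t\n", e.Name, e.URL, e.TLS)
	}
}
```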

|

||||||

|

|

||||||

|

// GetSchedule returns the schedule associated with the runner

|

||||||

|

func (runner *EndpointSyncJobRunner) GetSchedule() *portainer.Schedule {

|

||||||

|

return runner.schedule

|

||||||

|

}

|

||||||

|

|

||||||

|

// Run triggers the execution of the endpoint synchronization process.

|

||||||

|

func (runner *EndpointSyncJobRunner) Run() {

|

||||||

|

data, err := ioutil.ReadFile(runner.context.endpointFilePath)

|

||||||

|

if endpointSyncError(err) {

|

||||||

|

return

|

||||||

}

|

}

|

||||||

)

|

|

||||||

|

|

||||||

const (

|

var fileEndpoints []fileEndpoint

|

||||||

// ErrEmptyEndpointArray is an error raised when the external endpoint source array is empty.

|

err = json.Unmarshal(data, &fileEndpoints)

|

||||||

ErrEmptyEndpointArray = portainer.Error("External endpoint source is empty")

|

if endpointSyncError(err) {

|

||||||

)

|

return

|

||||||

|

}

|

||||||

|

|

||||||

func newEndpointSyncJob(endpointFilePath string, endpointService portainer.EndpointService) endpointSyncJob {

|

if len(fileEndpoints) == 0 {

|

||||||

return endpointSyncJob{

|

log.Println("background job error (endpoint synchronization). External endpoint source is empty")

|

||||||

endpointService: endpointService,

|

return

|

||||||

endpointFilePath: endpointFilePath,

|

}

|

||||||

|

|

||||||

|

storedEndpoints, err := runner.context.endpointService.Endpoints()

|

||||||

|

if endpointSyncError(err) {

|

||||||

|

return

|

||||||

|

}

|

||||||

|

|

||||||

|

convertedFileEndpoints := convertFileEndpoints(fileEndpoints)

|

||||||

|

|

||||||

|

sync := prepareSyncData(storedEndpoints, convertedFileEndpoints)

|

||||||

|

if sync.requireSync() {

|

||||||

|

err = runner.context.endpointService.Synchronize(sync.endpointsToCreate, sync.endpointsToUpdate, sync.endpointsToDelete)

|

||||||

|

if endpointSyncError(err) {

|

||||||

|

return

|

||||||

|

}

|

||||||

|

log.Printf("Endpoint synchronization ended. [created: %v] [updated: %v] [deleted: %v]", len(sync.endpointsToCreate), len(sync.endpointsToUpdate), len(sync.endpointsToDelete))

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

func endpointSyncError(err error) bool {

|

func endpointSyncError(err error) bool {

|

||||||

if err != nil {

|

if err != nil {

|

||||||

log.Printf("cron error: synchronization job error (err=%s)\n", err)

|

log.Printf("background job error (endpoint synchronization). Unable to synchronize endpoints (err=%s)\n", err)

|

||||||

return true

|

return true

|

||||||

}

|

}

|

||||||

return false

|

return false

|

||||||

|

|

@ -126,8 +175,7 @@ func (sync synchronization) requireSync() bool {

|

||||||

return false

|

return false

|

||||||

}

|

}

|

||||||

|

|

||||||

// TMP: endpointSyncJob method to access logger, should be generic

|

func prepareSyncData(storedEndpoints, fileEndpoints []portainer.Endpoint) *synchronization {

|

||||||

func (job endpointSyncJob) prepareSyncData(storedEndpoints, fileEndpoints []portainer.Endpoint) *synchronization {

|

|

||||||

endpointsToCreate := make([]*portainer.Endpoint, 0)

|

endpointsToCreate := make([]*portainer.Endpoint, 0)

|

||||||

endpointsToUpdate := make([]*portainer.Endpoint, 0)

|

endpointsToUpdate := make([]*portainer.Endpoint, 0)

|

||||||

endpointsToDelete := make([]*portainer.Endpoint, 0)

|

endpointsToDelete := make([]*portainer.Endpoint, 0)

|

||||||

|

|

@ -164,43 +212,3 @@ func (job endpointSyncJob) prepareSyncData(storedEndpoints, fileEndpoints []port

|

||||||

endpointsToDelete: endpointsToDelete,

|

endpointsToDelete: endpointsToDelete,

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

func (job endpointSyncJob) Sync() error {

|

|

||||||

data, err := ioutil.ReadFile(job.endpointFilePath)

|

|

||||||

if endpointSyncError(err) {

|

|

||||||

return err

|

|

||||||

}

|

|

||||||

|

|

||||||

var fileEndpoints []fileEndpoint

|

|

||||||

err = json.Unmarshal(data, &fileEndpoints)

|

|

||||||

if endpointSyncError(err) {

|

|

||||||

return err

|

|

||||||

}

|

|

||||||

|

|

||||||

if len(fileEndpoints) == 0 {

|

|

||||||

return ErrEmptyEndpointArray

|

|

||||||

}

|

|

||||||

|

|

||||||

storedEndpoints, err := job.endpointService.Endpoints()

|

|

||||||

if endpointSyncError(err) {

|

|

||||||

return err

|

|

||||||

}

|

|

||||||

|

|

||||||

convertedFileEndpoints := convertFileEndpoints(fileEndpoints)

|

|

||||||

|

|

||||||

sync := job.prepareSyncData(storedEndpoints, convertedFileEndpoints)

|

|

||||||

if sync.requireSync() {

|

|

||||||

err = job.endpointService.Synchronize(sync.endpointsToCreate, sync.endpointsToUpdate, sync.endpointsToDelete)

|

|

||||||

if endpointSyncError(err) {

|

|

||||||

return err

|

|

||||||

}

|

|

||||||

log.Printf("Endpoint synchronization ended. [created: %v] [updated: %v] [deleted: %v]", len(sync.endpointsToCreate), len(sync.endpointsToUpdate), len(sync.endpointsToDelete))

|

|

||||||

}

|

|

||||||

return nil

|

|

||||||

}

|

|

||||||

|

|

||||||

func (job endpointSyncJob) Run() {

|

|

||||||

log.Println("cron: synchronization job started")

|

|

||||||

err := job.Sync()

|

|

||||||

endpointSyncError(err)

|

|

||||||

}

|

|

||||||

|

|

|

||||||

96

api/cron/job_script_execution.go

Normal file


|

|

@ -0,0 +1,96 @@

|

||||||

|

package cron

|

||||||

|

|

||||||

|

import (

|

||||||

|

"log"

|

||||||

|

"time"

|

||||||

|

|

||||||

|

"github.com/portainer/portainer"

|

||||||

|

)

|

||||||

|

|

||||||

|

// ScriptExecutionJobRunner is used to run a ScriptExecutionJob

|

||||||

|

type ScriptExecutionJobRunner struct {

|

||||||

|

schedule *portainer.Schedule

|

||||||

|

context *ScriptExecutionJobContext

|

||||||

|

executedOnce bool

|

||||||

|

}

|

||||||

|

|

||||||

|

// ScriptExecutionJobContext represents the context of execution of a ScriptExecutionJob

|

||||||

|

type ScriptExecutionJobContext struct {

|

||||||

|

jobService portainer.JobService

|

||||||

|

endpointService portainer.EndpointService

|

||||||

|

fileService portainer.FileService

|

||||||

|

}

|

||||||

|

|

||||||

|

// NewScriptExecutionJobContext returns a new context that can be used to execute a ScriptExecutionJob

|

||||||

|

func NewScriptExecutionJobContext(jobService portainer.JobService, endpointService portainer.EndpointService, fileService portainer.FileService) *ScriptExecutionJobContext {

|

||||||

|

return &ScriptExecutionJobContext{

|

||||||

|

jobService: jobService,

|

||||||

|

endpointService: endpointService,

|

||||||

|

fileService: fileService,

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

// NewScriptExecutionJobRunner returns a new runner that can be scheduled

|

||||||

|

func NewScriptExecutionJobRunner(schedule *portainer.Schedule, context *ScriptExecutionJobContext) *ScriptExecutionJobRunner {

|

||||||

|

return &ScriptExecutionJobRunner{

|

||||||

|

schedule: schedule,

|

||||||

|

context: context,

|

||||||

|

executedOnce: false,

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

// Run triggers the execution of the job.

|

||||||

|

// It will iterate through all the endpoints specified in the context to

|

||||||

|

// execute the script associated with the job.

|

||||||

|

func (runner *ScriptExecutionJobRunner) Run() {

|

||||||

|

if !runner.schedule.Recurring && runner.executedOnce {

|

||||||

|

return

|

||||||

|

}

|

||||||

|

runner.executedOnce = true

|

||||||

|

|

||||||

|

scriptFile, err := runner.context.fileService.GetFileContent(runner.schedule.ScriptExecutionJob.ScriptPath)

|

||||||

|

if err != nil {

|

||||||

|

log.Printf("scheduled job error (script execution). Unable to retrieve script file (err=%s)\n", err)

|

||||||

|

return

|

||||||

|

}

|

||||||

|

|

||||||

|

targets := make([]*portainer.Endpoint, 0)

|

||||||

|

for _, endpointID := range runner.schedule.ScriptExecutionJob.Endpoints {

|

||||||

|

endpoint, err := runner.context.endpointService.Endpoint(endpointID)

|

||||||

|

if err != nil {

|

||||||

|

log.Printf("scheduled job error (script execution). Unable to retrieve information about endpoint (id=%d) (err=%s)\n", endpointID, err)

|

||||||

|

return

|

||||||

|

}

|

||||||

|

|

||||||

|

targets = append(targets, endpoint)

|

||||||

|

}

|

||||||

|

|

||||||

|

runner.executeAndRetry(targets, scriptFile, 0)

|

||||||

|

}

|

||||||

|

|

||||||

|

func (runner *ScriptExecutionJobRunner) executeAndRetry(endpoints []*portainer.Endpoint, script []byte, retryCount int) {

|

||||||

|

retryTargets := make([]*portainer.Endpoint, 0)

|

||||||

|

|

||||||

|

for _, endpoint := range endpoints {

|

||||||

|

err := runner.context.jobService.ExecuteScript(endpoint, "", runner.schedule.ScriptExecutionJob.Image, script, runner.schedule)

|

||||||

|

if err == portainer.ErrUnableToPingEndpoint {

|

||||||

|

retryTargets = append(retryTargets, endpoint)

|

||||||

|

} else if err != nil {

|

||||||

|

log.Printf("scheduled job error (script execution). Unable to execute script (endpoint=%s) (err=%s)\n", endpoint.Name, err)

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

retryCount++

|

||||||

|

if retryCount >= runner.schedule.ScriptExecutionJob.RetryCount {

|

||||||

|

return

|

||||||

|

}

|

||||||

|

|

||||||

|

time.Sleep(time.Duration(runner.schedule.ScriptExecutionJob.RetryInterval) * time.Second)

|

||||||

|

|

||||||

|

runner.executeAndRetry(retryTargets, script, retryCount)

|

||||||

|

}
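Review note: as I read the diff, `executeAndRetry` sleeps and recurses until the retry count is reached even when no endpoint is left to retry. A minimal iterative sketch that stops as soon as the retry list is empty is shown below. It is an illustration, not a drop-in replacement: `runWithRetry`, `errUnreachable` and the string targets are hypothetical stand-ins for the runner method, `portainer.ErrUnableToPingEndpoint` and the endpoint list.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

// errUnreachable is a hypothetical stand-in for the "unable to ping" error.
var errUnreachable = errors.New("unable to ping target")

// runWithRetry calls exec for every target, then retries only the targets
// that failed with errUnreachable. It makes at most maxAttempts passes
// (at least one) and returns the targets that are still unreachable.
func runWithRetry(targets []string, maxAttempts int, interval time.Duration, exec func(string) error) []string {
	if maxAttempts < 1 {
		maxAttempts = 1
	}

	pending := targets
	for attempt := 1; attempt <= maxAttempts && len(pending) > 0; attempt++ {
		if attempt > 1 {
			time.Sleep(interval) // wait only between passes
		}

		var retry []string
		for _, target := range pending {
			err := exec(target)
			if errors.Is(err, errUnreachable) {
				retry = append(retry, target)
			} else if err != nil {
				// Other errors are logged and not retried.
				fmt.Printf("unable to execute script (target=%s) (err=%s)\n", target, err)
			}
		}
		pending = retry
	}
	return pending
}

func main() {
	calls := map[string]int{}
	exec := func(target string) error {
		calls[target]++
		// "flaky" becomes reachable on its third call.
		if target == "flaky" && calls[target] < 3 {
			return errUnreachable
		}
		return nil
	}

	left := runWithRetry([]string{"stable", "flaky"}, 5, 10*time.Millisecond, exec)
	fmt.Println("still unreachable:", len(left))
	fmt.Println("calls to stable:", calls["stable"], "calls to flaky:", calls["flaky"])
}
```

The loop form also avoids growing the call stack by one frame per retry, which matters little for small retry counts but keeps the control flow easier to follow.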

|

||||||

|

|

||||||

|

// GetSchedule returns the schedule associated with the runner

|

||||||

|

func (runner *ScriptExecutionJobRunner) GetSchedule() *portainer.Schedule {

|

||||||

|

return runner.schedule

|

||||||

|

}

|

||||||

85

api/cron/job_snapshot.go

Normal file


|

|

@ -0,0 +1,85 @@

|

||||||

|

package cron

|

||||||

|

|

||||||

|

import (

|

||||||

|

"log"

|

||||||

|

|

||||||

|

"github.com/portainer/portainer"

|

||||||

|

)

|

||||||

|

|

||||||

|

// SnapshotJobRunner is used to run a SnapshotJob

|

||||||

|

type SnapshotJobRunner struct {

|

||||||

|

schedule *portainer.Schedule

|

||||||

|

context *SnapshotJobContext

|

||||||

|

}

|

||||||

|

|

||||||

|

// SnapshotJobContext represents the context of execution of a SnapshotJob

|

||||||

|

type SnapshotJobContext struct {

|

||||||

|

endpointService portainer.EndpointService

|

||||||

|

snapshotter portainer.Snapshotter

|

||||||

|

}

|

||||||

|

|

||||||

|

// NewSnapshotJobContext returns a new context that can be used to execute a SnapshotJob

|

||||||

|

func NewSnapshotJobContext(endpointService portainer.EndpointService, snapshotter portainer.Snapshotter) *SnapshotJobContext {

|

||||||

|

return &SnapshotJobContext{

|

||||||

|

endpointService: endpointService,

|

||||||

|

snapshotter: snapshotter,

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

// NewSnapshotJobRunner returns a new runner that can be scheduled

|

||||||

|

func NewSnapshotJobRunner(schedule *portainer.Schedule, context *SnapshotJobContext) *SnapshotJobRunner {

|

||||||

|

return &SnapshotJobRunner{

|

||||||

|

schedule: schedule,

|

||||||

|

context: context,

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

// GetSchedule returns the schedule associated with the runner

|

||||||

|

func (runner *SnapshotJobRunner) GetSchedule() *portainer.Schedule {

|

||||||

|

return runner.schedule

|

||||||

|

}

|

||||||

|

|

||||||

|

// Run triggers the execution of the schedule.

|

||||||

|

// It will iterate through all the endpoints available in the database to

|

||||||

|

// create a snapshot of each one of them.

|

||||||

|

// As a snapshot can be a long process, to avoid any concurrency issue we

|

||||||

|

// retrieve the latest version of the endpoint right after a snapshot.

|

||||||

|

func (runner *SnapshotJobRunner) Run() {

|

||||||

|

go func() {

|

||||||

|

endpoints, err := runner.context.endpointService.Endpoints()

|

||||||

|

if err != nil {

|

||||||

|

log.Printf("background schedule error (endpoint snapshot). Unable to retrieve endpoint list (err=%s)\n", err)

|

||||||