Mirror of https://github.com/documize/community.git, synced 2025-08-08 06:55:28 +02:00

Compare commits

526 commits

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

efb092ef8f | ||

|

|

3fc0a15f87 | ||

|

|

c841c85478 | ||

|

|

2dae03332b | ||

|

|

44b1f263cd | ||

|

|

982e16737e | ||

|

|

f641e42434 | ||

|

|

8895db56af | ||

|

|

acb59e1b43 | ||

|

|

f2ba294be8 | ||

|

|

69940cb7f1 | ||

|

|

6bfdda7178 | ||

|

|

027fdf108c | ||

|

|

1f12df76aa | ||

|

|

d811b88896 | ||

|

|

20fb853907 | ||

|

|

599c53a971 | ||

|

|

1f462ed4f7 | ||

|

|

9f122fa79b | ||

|

|

4210caca48 | ||

|

|

c62fa4612b | ||

|

|

510e1bd0bd | ||

|

|

a32510b8e6 | ||

|

|

589f3f581f | ||

|

|

20bba4cd7e | ||

|

|

cbf5f4be7d | ||

|

|

dc63639c99 | ||

|

|

26f435bdc9 | ||

|

|

a8a82963fa | ||

|

|

ab8582e807 | ||

|

|

4fa0566274 | ||

|

|

f4b45d2aa7 | ||

|

|

1abc5d3e52 | ||

|

|

6e463ff2f4 | ||

|

|

f80b3f3d10 | ||

|

|

6c218cf087 | ||

|

|

3d1c8a6c54 | ||

|

|

576fd5e604 | ||

|

|

62407a28b4 | ||

|

|

0adf6d5dc8 | ||

|

|

15f8a64c86 | ||

|

|

95c67acaa0 | ||

|

|

d8f66b5ffb | ||

|

|

c051e81a99 | ||

|

|

1d86b98949 | ||

|

|

0a1cc86907 | ||

|

|

a49869d35d | ||

|

|

848afd3263 | ||

|

|

b9cb99e3bb | ||

|

|

64261ffcf5 | ||

|

|

0030418707 | ||

|

|

0f91ee518e | ||

|

|

5de1b7a92e | ||

|

|

a2524f785e | ||

|

|

f16b9f3810 | ||

|

|

1c09771c33 | ||

|

|

13fc5b5015 | ||

|

|

76c777acc1 | ||

|

|

ea9ff78411 | ||

|

|

4a9dd47894 | ||

|

|

7565779ef1 | ||

|

|

c07e7b6afc | ||

|

|

88bdafcb1b | ||

|

|

5a3cb1b226 | ||

|

|

6ee8e6c7b4 | ||

|

|

599c464d2d | ||

|

|

ae77fa2275 | ||

|

|

610367aac5 | ||

|

|

be2c2a7a2c | ||

|

|

0d28b7ee79 | ||

|

|

aa8b473018 | ||

|

|

6993dc678f | ||

|

|

e0e3f0c141 | ||

|

|

4c031fe7e4 | ||

|

|

e4025bee42 | ||

|

|

876775b395 | ||

|

|

828c01d189 | ||

|

|

a69bcc0af6 | ||

|

|

5ec911dce2 | ||

|

|

ce07d4d147 | ||

|

|

f3ef83162e | ||

|

|

f1a01ec195 | ||

|

|

01e53c3d27 | ||

|

|

2cf21a7bea | ||

|

|

d4c606760c | ||

|

|

9343d77b26 | ||

|

|

30aa8aadb6 | ||

|

|

29bc2677a8 | ||

|

|

d9827df440 | ||

|

|

cfd7ebd2bf | ||

|

|

b510615691 | ||

|

|

e8641405cf | ||

|

|

209f1b667e | ||

|

|

e70019d73b | ||

|

|

dc26f063c8 | ||

|

|

68d067ef7b | ||

|

|

0d52f434d5 | ||

|

|

ce22c78dac | ||

|

|

f976ea36f6 | ||

|

|

1734963693 | ||

|

|

247a2b2c03 | ||

|

|

38a790dd04 | ||

|

|

b77b4abdc2 | ||

|

|

6b498a74c6 | ||

|

|

f6dd872782 | ||

|

|

9473ecba9a | ||

|

|

1a909dd046 | ||

|

|

607a2d5797 | ||

|

|

037dfc40cd | ||

|

|

65348eee28 | ||

|

|

78932fb8c7 | ||

|

|

6c8b10753d | ||

|

|

e56263564c | ||

|

|

22b6a4fb78 | ||

|

|

7e26c003d6 | ||

|

|

e81cbad385 | ||

|

|

4494ace0a2 | ||

|

|

23abcf1585 | ||

|

|

67070c3bfc | ||

|

|

77c767a351 | ||

|

|

17162ce336 | ||

|

|

7255eb4f56 | ||

|

|

df534f72fa | ||

|

|

f4a1350a41 | ||

|

|

cd15c393fe | ||

|

|

7f66977ac1 | ||

|

|

33a9cbb5b0 | ||

|

|

716343680a | ||

|

|

5db5f4d63b | ||

|

|

3d3d50762e | ||

|

|

20c9168140 | ||

|

|

ce9c635fb4 | ||

|

|

f735ae1278 | ||

|

|

bca7794c00 | ||

|

|

371706fb49 | ||

|

|

a236cbb01c | ||

|

|

93b6f26365 | ||

|

|

5e687f5ef4 | ||

|

|

97c4c927ac | ||

|

|

4885a1b380 | ||

|

|

e0805d7131 | ||

|

|

6d735e8579 | ||

|

|

073ef81e80 | ||

|

|

38c9a94a9c | ||

|

|

59dc6ea991 | ||

|

|

4ab48cc67d | ||

|

|

53297f7627 | ||

|

|

4ed2b3902c | ||

|

|

6968581e5b | ||

|

|

c09a116e56 | ||

|

|

7cf672646a | ||

|

|

29447a2784 | ||

|

|

479d03ba70 | ||

|

|

a7dac6911c | ||

|

|

08f21346c1 | ||

|

|

ce4f62d346 | ||

|

|

8a25509019 | ||

|

|

59c929d251 | ||

|

|

245c538990 | ||

|

|

32a9528e6d | ||

|

|

a15f0c8eb6 | ||

|

|

eb9fbd25b9 | ||

|

|

dbef758035 | ||

|

|

dea25a2b85 | ||

|

|

fcf38d8af9 | ||

|

|

ce93a5e623 | ||

|

|

8df1cc73b0 | ||

|

|

53ec7c9274 | ||

|

|

cfe85248ce | ||

|

|

30c31a1ba7 | ||

|

|

a97b6b22d9 | ||

|

|

e985c5f808 | ||

|

|

4b89f3b1c2 | ||

|

|

707dc1e052 | ||

|

|

88211739f0 | ||

|

|

6b3cdb5033 | ||

|

|

45f216b8a1 | ||

|

|

c31c130ffd | ||

|

|

5d5e212a6b | ||

|

|

8fa5569ae5 | ||

|

|

8976bf817b | ||

|

|

0c3fed2b18 | ||

|

|

60dfb54d54 | ||

|

|

c6863201b3 | ||

|

|

45567e274a | ||

|

|

dff4c6929b | ||

|

|

eea8db9288 | ||

|

|

e19c4ad18a | ||

|

|

989b7cd62c | ||

|

|

df8f650319 | ||

|

|

565a063231 | ||

|

|

cb46f34503 | ||

|

|

470e2d3ecf | ||

|

|

cddba799f8 | ||

|

|

05df22ed4a | ||

|

|

a5dfa6ee39 | ||

|

|

780ce2df61 | ||

|

|

9f28e1bff2 | ||

|

|

8ae94295a2 | ||

|

|

adb7b4d7bf | ||

|

|

66fcb77d8b | ||

|

|

972413110f | ||

|

|

a0a166136e | ||

|

|

30d12ba756 | ||

|

|

06bf9efcfc | ||

|

|

9ed8f79315 | ||

|

|

73e8c7a278 | ||

|

|

806efd7eac | ||

|

|

724f3c88b3 | ||

|

|

4a7d915ebb | ||

|

|

c7413da943 | ||

|

|

4e0218f5ea | ||

|

|

4fe022aa0c | ||

|

|

aaa8c3282d | ||

|

|

5e022dd0b8 | ||

|

|

bbca180298 | ||

|

|

cdc7489659 | ||

|

|

ab95fcc64d | ||

|

|

9bee58057e | ||

|

|

bda9719ecb | ||

|

|

fbd4b17c15 | ||

|

|

c689379f92 | ||

|

|

d1774b42bd | ||

|

|

8ac35a6b74 | ||

|

|

813f270a9d | ||

|

|

e014f5b5c1 | ||

|

|

2b66d0096a | ||

|

|

50f47f61a5 | ||

|

|

d26ecdc12f | ||

|

|

1a89201bd9 | ||

|

|

accf0a2c63 | ||

|

|

cafa3ceed0 | ||

|

|

2b3e9dfbc9 | ||

|

|

1c1ebee15a | ||

|

|

6ba4ca9c16 | ||

|

|

5aaa9f874d | ||

|

|

51a25adbdb | ||

|

|

9d025c3f71 | ||

|

|

a4384210d4 | ||

|

|

6882491201 | ||

|

|

d4edcb8b2c | ||

|

|

f117e91bcb | ||

|

|

5c1ad25dc9 | ||

|

|

8970a21b58 | ||

|

|

0e6f2f1f5e | ||

|

|

7fc74be7cd | ||

|

|

faeadb2bbb | ||

|

|

be50bf9f14 | ||

|

|

60ef205948 | ||

|

|

7ae801554d | ||

|

|

441efd42e9 | ||

|

|

a19ba46f7a | ||

|

|

ad361c22ba | ||

|

|

7954f4b976 | ||

|

|

2d105f2154 | ||

|

|

811e239baf | ||

|

|

c7e71173ea | ||

|

|

8fa8a3657c | ||

|

|

a64a219ce8 | ||

|

|

d7a484a936 | ||

|

|

017b19141c | ||

|

|

39f457e90e | ||

|

|

30d3e6f82e | ||

|

|

8c2bed283f | ||

|

|

a3867c617a | ||

|

|

28424e7e4b | ||

|

|

7c70274f5e | ||

|

|

ef5b5cdb32 | ||

|

|

ccd756aca0 | ||

|

|

444b89e425 | ||

|

|

3d0f17386b | ||

|

|

513fd9f994 | ||

|

|

5cef58eeba | ||

|

|

fad1de2e41 | ||

|

|

6b723568d3 | ||

|

|

00889f0e0e | ||

|

|

6629d76453 | ||

|

|

74300b009b | ||

|

|

5004e5a85e | ||

|

|

0524a0c74c | ||

|

|

b826852137 | ||

|

|

2c164a135a | ||

|

|

44febcc25c | ||

|

|

66e11cefbc | ||

|

|

5e9eeb5bf9 | ||

|

|

5b7610d726 | ||

|

|

0419f3b7b3 | ||

|

|

5b72da037c | ||

|

|

d14e8a3ff6 | ||

|

|

9a3d2c3c28 | ||

|

|

3b76e10ee0 | ||

|

|

29d7307537 | ||

|

|

96e5812fc0 | ||

|

|

c35eb16fc5 | ||

|

|

9dd78ca9be | ||

|

|

891ba07db8 | ||

|

|

2ee9a9ff46 | ||

|

|

399c36611f | ||

|

|

fbb73560c0 | ||

|

|

15e687841f | ||

|

|

0a10087160 | ||

|

|

9d0d4a7861 | ||

|

|

fc60a5917e | ||

|

|

285a01508b | ||

|

|

4f248bf018 | ||

|

|

32dbab826d | ||

|

|

b6e1543b7f | ||

|

|

f8bb879a70 | ||

|

|

20366e6776 | ||

|

|

ffacf17c5f | ||

|

|

bfe4c5d768 | ||

|

|

0f3a618140 | ||

|

|

826f6d96a6 | ||

|

|

6a9fa0140a | ||

|

|

24619c6a58 | ||

|

|

ebc8214049 | ||

|

|

71c1def5c7 | ||

|

|

fded0014a3 | ||

|

|

041091504f | ||

|

|

8c2df6178d | ||

|

|

02d478c6dd | ||

|

|

8c99977fc9 | ||

|

|

9d6b6fec23 | ||

|

|

4e0e3b5101 | ||

|

|

e219c97a6b | ||

|

|

7485f2cef7 | ||

|

|

627195aae7 | ||

|

|

f39be2a594 | ||

|

|

444b4fd1f7 | ||

|

|

b31f330c41 | ||

|

|

69077ce419 | ||

|

|

201d2a339c | ||

|

|

326019d655 | ||

|

|

264c25cfe0 | ||

|

|

595301db64 | ||

|

|

d6432afdad | ||

|

|

9c36241b58 | ||

|

|

c538fc9eb1 | ||

|

|

f3df43efe0 | ||

|

|

d04becc1a3 | ||

|

|

3621e2fb79 | ||

|

|

411f64c359 | ||

|

|

ae923e7df1 | ||

|

|

bfe5262cb5 | ||

|

|

80f0876b51 | ||

|

|

b2cd375936 | ||

|

|

243a170071 | ||

|

|

fb3f2cc24b | ||

|

|

946c433018 | ||

|

|

4d2f30711c | ||

|

|

887c999a1e | ||

|

|

b256bf2e9d | ||

|

|

8f4cd755de | ||

|

|

64612b825a | ||

|

|

216866a953 | ||

|

|

14820df165 | ||

|

|

f7a738ad84 | ||

|

|

df2775f8a4 | ||

|

|

a710839f69 | ||

|

|

2a45c82b46 | ||

|

|

7eec01811a | ||

|

|

ef5e4db298 | ||

|

|

b1cb0ed155 | ||

|

|

78fd14b3d3 | ||

|

|

82ddcc057d | ||

|

|

a90c5834fa | ||

|

|

ec8d5c78e2 | ||

|

|

b75969ae90 | ||

|

|

9b82f42cc1 | ||

|

|

b8fee6b962 | ||

|

|

8baad7e2f0 | ||

|

|

99a5418dba | ||

|

|

acd3dd63b5 | ||

|

|

96872990f9 | ||

|

|

c59a467cdb | ||

|

|

715c31a1da | ||

|

|

40237344e2 | ||

|

|

91a3c59cd2 | ||

|

|

fe7548cd97 | ||

|

|

ca4a9a74ee | ||

|

|

1e1cbdd843 | ||

|

|

c8b82c85fe | ||

|

|

bae7909801 | ||

|

|

c870547fa1 | ||

|

|

c0876e7be8 | ||

|

|

cd9f681adf | ||

|

|

0240f98eb0 | ||

|

|

c49707d160 | ||

|

|

c65eb97948 | ||

|

|

bc9dab72f2 | ||

|

|

6ae9414361 | ||

|

|

e37782e5b7 | ||

|

|

2bbeaf91a0 | ||

|

|

de273a38ed | ||

|

|

08794f8d5f | ||

|

|

14f313a836 | ||

|

|

62c3cd03ad | ||

|

|

bce1c1b166 | ||

|

|

758bf07272 | ||

|

|

479508e436 | ||

|

|

49bf4eeaa0 | ||

|

|

1c45aef461 | ||

|

|

a988bc0c3c | ||

|

|

91ec2f89d8 | ||

|

|

2477c36f11 | ||

|

|

e2a3962092 | ||

|

|

8ecbb9cdee | ||

|

|

f738077f5a | ||

|

|

f17de58fff | ||

|

|

40a0d77f93 | ||

|

|

072ca0dfed | ||

|

|

b054addb9c | ||

|

|

e59e1f060a | ||

|

|

faf9a555d2 | ||

|

|

8ab3cbe7e8 | ||

|

|

86d25b2191 | ||

|

|

9a53958c8f | ||

|

|

b971c52469 | ||

|

|

34d1639899 | ||

|

|

1fefdaec9f | ||

|

|

8f1bc8ce1f | ||

|

|

d151555597 | ||

|

|

4255291223 | ||

|

|

86a4e82c12 | ||

|

|

daa9e08ab4 | ||

|

|

c666e68c2b | ||

|

|

e6e3ed71ac | ||

|

|

89a28ad22f | ||

|

|

8e4ad6422b | ||

|

|

4de83beba4 | ||

|

|

10c57a0ae1 | ||

|

|

728789195c | ||

|

|

b5cd378302 | ||

|

|

7fde947a52 | ||

|

|

61d0086337 | ||

|

|

166aeba09b | ||

|

|

c0ed3c3d04 | ||

|

|

ab5314d5e1 | ||

|

|

51a0e1127e | ||

|

|

e10d04d22e | ||

|

|

2fffb7869e | ||

|

|

82ed36478b | ||

|

|

1da49974cb | ||

|

|

9e3eac19aa | ||

|

|

92696c5181 | ||

|

|

eecf316d50 | ||

|

|

fa383a58ff | ||

|

|

b5a5cfd697 | ||

|

|

2ddd7ada9b | ||

|

|

8515a77403 | ||

|

|

f8d97d2a56 | ||

|

|

e98f7b9218 | ||

|

|

a41f43c380 | ||

|

|

64403c402b | ||

|

|

9ec858286f | ||

|

|

80aab3ce99 | ||

|

|

deb579d8ad | ||

|

|

e6335dd58c | ||

|

|

e1a8f8b724 | ||

|

|

e1001bb11e | ||

|

|

dbee77df56 | ||

|

|

9c2bff0374 | ||

|

|

651cbb1dfe | ||

|

|

a98c3a0fe2 | ||

|

|

a08b583b22 | ||

|

|

6738d2c9e1 | ||

|

|

441001fffe | ||

|

|

a4e07fbf7f | ||

|

|

9a41e82aa3 | ||

|

|

b89a297c70 | ||

|

|

ca1e281775 | ||

|

|

c5fc0f93e0 | ||

|

|

217e8a3a29 | ||

|

|

1854998c80 | ||

|

|

f4a371357e | ||

|

|

1d00f8ac6e | ||

|

|

0985dbf5b6 | ||

|

|

b2fcad649e | ||

|

|

f062005946 | ||

|

|

36d7136210 | ||

|

|

0bfde82040 | ||

|

|

e6e5f75ee7 | ||

|

|

eb9501014d | ||

|

|

e35639502d | ||

|

|

3db4981181 | ||

|

|

3206eb4176 | ||

|

|

576e1beade | ||

|

|

54eefc5132 | ||

|

|

fbb1e334f8 | ||

|

|

bb73655327 | ||

|

|

8c2febd636 | ||

|

|

395008d06d | ||

|

|

d009e4ed2a | ||

|

|

54bf258c61 | ||

|

|

8332e8a03d | ||

|

|

a6f8be2928 | ||

|

|

cbd9fddcfe | ||

|

|

4013b5ca03 | ||

|

|

24b1326c31 | ||

|

|

566807bc14 | ||

|

|

df8e843bf5 | ||

|

|

4d0de69489 | ||

|

|

25c247e99b | ||

|

|

ed99b0c9f3 | ||

|

|

8b0bb456d9 | ||

|

|

e438542cab | ||

|

|

553c17181e | ||

|

|

9b06ddecb5 | ||

|

|

3fd1d793a3 | ||

|

|

fc17ea5225 | ||

|

|

f47f09661f | ||

|

|

4b7d4cf872 | ||

|

|

c108d0eb30 | ||

|

|

1f5221ffa0 | ||

|

|

a888b12ad1 | ||

|

|

2510972a83 | ||

|

|

560f786b8b | ||

|

|

af641b93f1 | ||

|

|

f4fa63359f | ||

|

|

f828583b49 | ||

|

|

af9bc25660 | ||

|

|

66003dac21 | ||

|

|

d4f6694933 | ||

|

|

27030a0dc2 | ||

|

|

43f515a1f9 | ||

|

|

9aaea9492a |

1803 changed files with 388215 additions and 104888 deletions

|

|

@@ -1,3 +1,6 @@

|

|||

.DS_Store

|

||||

.git

|

||||

bin

|

||||

.idea

|

||||

selfcert

|

||||

gui/dist-prod

|

||||

|

|

@@ -1,9 +0,0 @@

|

|||

root = true

|

||||

|

||||

[*]

|

||||

indent_style = space

|

||||

end_of_line = lf

|

||||

charset = utf-8

|

||||

trim_trailing_whitespace = true

|

||||

indent_size = 4

|

||||

insert_final_newline = true

|

||||

3

.gitignore

vendored


|

|

@@ -18,6 +18,7 @@ _convert

|

|||

bin/*

|

||||

dist/*

|

||||

embed/bindata/*

|

||||

edition/static/*

|

||||

gui/dist/*

|

||||

gui/dist-prod/*

|

||||

|

||||

|

|

@@ -52,7 +53,6 @@ _testmain.go

|

|||

node_modules

|

||||

|

||||

# Misc.

|

||||

build

|

||||

plugin-msword/plugin-msword

|

||||

plugin-msword/plugin-msword-osx

|

||||

npm-debug.log

|

||||

|

|

@@ -70,3 +70,4 @@ bower.json.ember-try

|

|||

package.json.ember-try

|

||||

embed/bindata_assetfs.go

|

||||

dmz-backup*.zip

|

||||

*.conf

|

||||

|

|

|

|||

|

|

@@ -1,62 +0,0 @@

|

|||

{

|

||||

"css": {

|

||||

"indent_size": 4,

|

||||

"indent_level": 0,

|

||||

"indent_with_tabs": true,

|

||||

"preserve_newlines": true,

|

||||

"max_preserve_newlines": 2,

|

||||

"newline_between_rules": true,

|

||||

"selector_separator_newlines": true

|

||||

},

|

||||

"scss": {

|

||||

"indent_size": 4,

|

||||

"indent_level": 0,

|

||||

"indent_with_tabs": true,

|

||||

"preserve_newlines": true,

|

||||

"max_preserve_newlines": 2,

|

||||

"newline_between_rules": true,

|

||||

"selector_separator_newlines": true

|

||||

},

|

||||

"html": {

|

||||

"indent_size": 4,

|

||||

"indent_level": 0,

|

||||

"indent_with_tabs": true,

|

||||

"preserve_newlines": true,

|

||||

"max_preserve_newlines": 2,

|

||||

"wrap_line_length": 0,

|

||||

"indent_handlebars": true,

|

||||

"indent_inner_html": false,

|

||||

"indent_scripts": "keep"

|

||||

},

|

||||

"hbs": {

|

||||

"indent_size": 4,

|

||||

"indent_level": 0,

|

||||

"indent_with_tabs": true,

|

||||

"max_preserve_newlines": 2,

|

||||

"preserve_newlines": true,

|

||||

"wrap_line_length": 0

|

||||

},

|

||||

"js": {

|

||||

"indent_size": 4,

|

||||

"indent_level": 0,

|

||||

"indent_with_tabs": true,

|

||||

"preserve_newlines": true,

|

||||

"wrap_line_length": 0,

|

||||

"break_chained_methods": false,

|

||||

"max_preserve_newlines": 2,

|

||||

"jslint_happy": true,

|

||||

"brace_style": "collapse-preserve-inline",

|

||||

"keep_function_indentation": false,

|

||||

"space_after_anon_function": false,

|

||||

"space_before_anon_function": false,

|

||||

"space_before_conditional": true,

|

||||

"space_in_empty_paren": false,

|

||||

"space_before_func_paren": false,

|

||||

"space_in_paren": false

|

||||

},

|

||||

"sql": {

|

||||

"indent_size": 4,

|

||||

"indent_level": 0,

|

||||

"indent_with_tabs": true

|

||||

}

|

||||

}

|

||||

|

|

@@ -1,3 +0,0 @@

|

|||

gui/public/tinymce/**

|

||||

gui/public/tinymce/

|

||||

gui/public/tinymce

|

||||

|

|

@@ -1,3 +0,0 @@

|

|||

{

|

||||

"esversion":6

|

||||

}

|

||||

32

Dockerfile

Normal file


|

|

@@ -0,0 +1,32 @@

|

|||

FROM node:16-alpine as frontbuilder

|

||||

WORKDIR /go/src/github.com/documize/community/gui

|

||||

COPY ./gui /go/src/github.com/documize/community/gui

|

||||

RUN npm --network-timeout=100000 install

|

||||

RUN npm run build -- --environment=production --output-path dist-prod --suppress-sizes true

|

||||

|

||||

FROM golang:1.21-alpine as builder

|

||||

WORKDIR /go/src/github.com/documize/community

|

||||

COPY . /go/src/github.com/documize/community

|

||||

COPY --from=frontbuilder /go/src/github.com/documize/community/gui/dist-prod/assets /go/src/github.com/documize/community/edition/static/public/assets

|

||||

COPY --from=frontbuilder /go/src/github.com/documize/community/gui/dist-prod/codemirror /go/src/github.com/documize/community/edition/static/public/codemirror

|

||||

COPY --from=frontbuilder /go/src/github.com/documize/community/gui/dist-prod/prism /go/src/github.com/documize/community/edition/static/public/prism

|

||||

COPY --from=frontbuilder /go/src/github.com/documize/community/gui/dist-prod/sections /go/src/github.com/documize/community/edition/static/public/sections

|

||||

COPY --from=frontbuilder /go/src/github.com/documize/community/gui/dist-prod/tinymce /go/src/github.com/documize/community/edition/static/public/tinymce

|

||||

COPY --from=frontbuilder /go/src/github.com/documize/community/gui/dist-prod/pdfjs /go/src/github.com/documize/community/edition/static/public/pdfjs

|

||||

COPY --from=frontbuilder /go/src/github.com/documize/community/gui/dist-prod/i18n /go/src/github.com/documize/community/edition/static/public/i18n

|

||||

COPY --from=frontbuilder /go/src/github.com/documize/community/gui/dist-prod/*.* /go/src/github.com/documize/community/edition/static/

|

||||

COPY --from=frontbuilder /go/src/github.com/documize/community/gui/dist-prod/i18n/*.json /go/src/github.com/documize/community/edition/static/i18n/

|

||||

COPY domain/mail/*.html /go/src/github.com/documize/community/edition/static/mail/

|

||||

COPY core/database/templates/*.html /go/src/github.com/documize/community/edition/static/

|

||||

COPY core/database/scripts/mysql/*.sql /go/src/github.com/documize/community/edition/static/scripts/mysql/

|

||||

COPY core/database/scripts/postgresql/*.sql /go/src/github.com/documize/community/edition/static/scripts/postgresql/

|

||||

COPY core/database/scripts/sqlserver/*.sql /go/src/github.com/documize/community/edition/static/scripts/sqlserver/

|

||||

COPY domain/onboard/*.json /go/src/github.com/documize/community/edition/static/onboard/

|

||||

RUN env GODEBUG=tls13=1 go build -mod=vendor -o bin/documize-community ./edition/community.go

|

||||

|

||||

# build release image

|

||||

FROM alpine:3.16

|

||||

RUN apk add --no-cache ca-certificates

|

||||

COPY --from=builder /go/src/github.com/documize/community/bin/documize-community /documize

|

||||

EXPOSE 5001

|

||||

ENTRYPOINT [ "/documize" ]

|

||||

301

Gopkg.lock

generated


|

|

@@ -1,301 +0,0 @@

|

|||

# This file is autogenerated, do not edit; changes may be undone by the next 'dep ensure'.

|

||||

|

||||

|

||||

[[projects]]

|

||||

digest = "1:606d068450c82b9ddaa21de992f73563754077f0f411235cdfe71d0903a268c3"

|

||||

name = "github.com/codegangsta/negroni"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "5dbbc83f748fc3ad38585842b0aedab546d0ea1e"

|

||||

version = "v0.3.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:217f778e19b8d206112c21d21a7cc72ca3cb493b67631680a2324bc50335d432"

|

||||

name = "github.com/dgrijalva/jwt-go"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "dbeaa9332f19a944acb5736b4456cfcc02140e29"

|

||||

version = "v3.1.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:39c2113f3a89585666e6f973650cff186b2d06deb4aa202c88addb87b0a201db"

|

||||

name = "github.com/documize/blackfriday"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "cadec560ec52d93835bf2f15bd794700d3a2473b"

|

||||

version = "v2.0.0"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:04bfeb11ea882e0a0867828e54374c066a1368f8da53bb1bbc16a9886967303a"

|

||||

name = "github.com/documize/glick"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "a8ccbef88237fcafe9cef3c9aee7ad83d0e132f9"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:2405d7a1e936e015b07c1c88acccc30d7f2e917b1b5acea08d06d116b8657a5c"

|

||||

name = "github.com/documize/html-diff"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "f61c192c7796644259832ef705c49259797e7fff"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:0ae2e1b2d4cdff4834aa28ce2e33a7b6de91e10150e3647fe1b9fd63a51b39ce"

|

||||

name = "github.com/documize/slug"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "e9f42fa127660e552d0ad2b589868d403a9be7c6"

|

||||

version = "v1.1.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:f4f6279cb37479954644babd8f8ef00584ff9fa63555d2c6718c1c3517170202"

|

||||

name = "github.com/elazarl/go-bindata-assetfs"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "30f82fa23fd844bd5bb1e5f216db87fd77b5eb43"

|

||||

version = "v1.0.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:ca82a3b99694824c627573c2a76d0e49719b4a9c02d1d85a2ac91f1c1f52ab9b"

|

||||

name = "github.com/fatih/structs"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "a720dfa8df582c51dee1b36feabb906bde1588bd"

|

||||

version = "v1.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:adea5a94903eb4384abef30f3d878dc9ff6b6b5b0722da25b82e5169216dfb61"

|

||||

name = "github.com/go-sql-driver/mysql"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "d523deb1b23d913de5bdada721a6071e71283618"

|

||||

version = "v1.4.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:ffc060c551980d37ee9e428ef528ee2813137249ccebb0bfc412ef83071cac91"

|

||||

name = "github.com/golang/protobuf"

|

||||

packages = ["proto"]

|

||||

pruneopts = "UT"

|

||||

revision = "925541529c1fa6821df4e44ce2723319eb2be768"

|

||||

version = "v1.0.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:51bee9f1987dcdb9f9a1b4c20745d78f6bf6f5f14ad4e64ca883eb64df4c0045"

|

||||

name = "github.com/google/go-github"

|

||||

packages = ["github"]

|

||||

pruneopts = "UT"

|

||||

revision = "e48060a28fac52d0f1cb758bc8b87c07bac4a87d"

|

||||

version = "v15.0.0"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:a63cff6b5d8b95638bfe300385d93b2a6d9d687734b863da8e09dc834510a690"

|

||||

name = "github.com/google/go-querystring"

|

||||

packages = ["query"]

|

||||

pruneopts = "UT"

|

||||

revision = "53e6ce116135b80d037921a7fdd5138cf32d7a8a"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:160eabf7a69910fd74f29c692718bc2437c1c1c7d4c9dea9712357752a70e5df"

|

||||

name = "github.com/gorilla/context"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "1ea25387ff6f684839d82767c1733ff4d4d15d0a"

|

||||

version = "v1.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:88aa9e326e2bd6045a46e00a922954b3e1a9ac5787109f49ac85366df370e1e5"

|

||||

name = "github.com/gorilla/mux"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "53c1911da2b537f792e7cafcb446b05ffe33b996"

|

||||

version = "v1.6.1"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:6c41d4f998a03b6604227ccad36edaed6126c397e5d78709ef4814a1145a6757"

|

||||

name = "github.com/jmoiron/sqlx"

|

||||

packages = [

|

||||

".",

|

||||

"reflectx",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "d161d7a76b5661016ad0b085869f77fd410f3e6a"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:8ef506fc2bb9ced9b151dafa592d4046063d744c646c1bbe801982ce87e4bc24"

|

||||

name = "github.com/lib/pq"

|

||||

packages = [

|

||||

".",

|

||||

"oid",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "4ded0e9383f75c197b3a2aaa6d590ac52df6fd79"

|

||||

version = "v1.0.0"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:8213aea9ec57afac7c765f9127bb3a5677866e03c0d3815f236045f16d5bc468"

|

||||

name = "github.com/mb0/diff"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "d8d9a906c24d7b0ee77287e0463e5ca7f026032e"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:0e1e5f960c58fdc677212fcc70e55042a0084d367623e51afbdb568963832f5d"

|

||||

name = "github.com/nu7hatch/gouuid"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "179d4d0c4d8d407a32af483c2354df1d2c91e6c3"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:40e195917a951a8bf867cd05de2a46aaf1806c50cf92eebf4c16f78cd196f747"

|

||||

name = "github.com/pkg/errors"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "645ef00459ed84a119197bfb8d8205042c6df63d"

|

||||

version = "v0.8.0"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:e6a29574542c00bb18adb1bfbe629ff88c468c2af2e2e953d3e58eda07165086"

|

||||

name = "github.com/rainycape/unidecode"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "cb7f23ec59bec0d61b19c56cd88cee3d0cc1870c"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:def689e73e9252f6f7fe66834a76751a41b767e03daab299e607e7226c58a855"

|

||||

name = "github.com/shurcooL/sanitized_anchor_name"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "86672fcb3f950f35f2e675df2240550f2a50762f"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:821c90494c34add2aa5f7c3b894f55dd08741acbb390901663050449b777c39a"

|

||||

name = "github.com/trivago/tgo"

|

||||

packages = [

|

||||

"tcontainer",

|

||||

"treflect",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "e4d1ddd28c17dd89ed26327cf69fded22060671b"

|

||||

version = "v1.0.1"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:1ecf2a49df33be51e757d0033d5d51d5f784f35f68e5a38f797b2d3f03357d71"

|

||||

name = "golang.org/x/crypto"

|

||||

packages = [

|

||||

"bcrypt",

|

||||

"blowfish",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "650f4a345ab4e5b245a3034b110ebc7299e68186"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:ac7eaa5f1179480f517d32831225215cc20940152d66be29f3d5204ea15d425f"

|

||||

name = "golang.org/x/net"

|

||||

packages = [

|

||||

"context",

|

||||

"context/ctxhttp",

|

||||

"html",

|

||||

"html/atom",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "f5dfe339be1d06f81b22525fe34671ee7d2c8904"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:fe84cb4abe7f53047ac44cf10d917d707a718711e146c9239700e4c8cc94a891"

|

||||

name = "golang.org/x/oauth2"

|

||||

packages = [

|

||||

".",

|

||||

"internal",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "543e37812f10c46c622c9575afd7ad22f22a12ba"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:f40806967647e80fc51b941a586afefea6058592692c0bbfb3be7ea6b2b2a82d"

|

||||

name = "google.golang.org/appengine"

|

||||

packages = [

|

||||

"cloudsql",

|

||||

"internal",

|

||||

"internal/base",

|

||||

"internal/datastore",

|

||||

"internal/log",

|

||||

"internal/remote_api",

|

||||

"internal/urlfetch",

|

||||

"urlfetch",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "150dc57a1b433e64154302bdc40b6bb8aefa313a"

|

||||

version = "v1.0.0"

|

||||

|

||||

[[projects]]

|

||||

branch = "v3"

|

||||

digest = "1:7388652e2215a3f45d341d58766ed58317971030eb1cbd75f005f96ace8e9196"

|

||||

name = "gopkg.in/alexcesaro/quotedprintable.v3"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "2caba252f4dc53eaf6b553000885530023f54623"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:ec2b97c119fc66f96b421f8798deb2f87cb4a5ee81cafeaf9b55420d035f8fea"

|

||||

name = "gopkg.in/andygrunwald/go-jira.v1"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "0298784c4606cdf01e99644da115863c052a737c"

|

||||

version = "v1.5.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:81e1c5cee195fca5de06e2540cb63eea727a850b7e5c213548e7f81521c97a57"

|

||||

name = "gopkg.in/asn1-ber.v1"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "379148ca0225df7a432012b8df0355c2a2063ac0"

|

||||

version = "v1.2"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:93aaeb913621a3a53aaa78592c00f46d63e3bb0ea76e2d9b07327b50959a5778"

|

||||

name = "gopkg.in/ldap.v2"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "bb7a9ca6e4fbc2129e3db588a34bc970ffe811a9"

|

||||

version = "v2.5.1"

|

||||

|

||||

[solve-meta]

|

||||

analyzer-name = "dep"

|

||||

analyzer-version = 1

|

||||

input-imports = [

|

||||

"github.com/codegangsta/negroni",

|

||||

"github.com/dgrijalva/jwt-go",

|

||||

"github.com/documize/blackfriday",

|

||||

"github.com/documize/glick",

|

||||

"github.com/documize/html-diff",

|

||||

"github.com/documize/slug",

|

||||

"github.com/elazarl/go-bindata-assetfs",

|

||||

"github.com/go-sql-driver/mysql",

|

||||

"github.com/google/go-github/github",

|

||||

"github.com/gorilla/mux",

|

||||

"github.com/jmoiron/sqlx",

|

||||

"github.com/lib/pq",

|

||||

"github.com/nu7hatch/gouuid",

|

||||

"github.com/pkg/errors",

|

||||

"golang.org/x/crypto/bcrypt",

|

||||

"golang.org/x/net/context",

|

||||

"golang.org/x/net/html",

|

||||

"golang.org/x/net/html/atom",

|

||||

"golang.org/x/oauth2",

|

||||

"gopkg.in/alexcesaro/quotedprintable.v3",

|

||||

"gopkg.in/andygrunwald/go-jira.v1",

|

||||

"gopkg.in/ldap.v2",

|

||||

]

|

||||

solver-name = "gps-cdcl"

|

||||

solver-version = 1

|

||||

98

Gopkg.toml


|

|

@@ -1,98 +0,0 @@

|

|||

# Gopkg.toml example

|

||||

#

|

||||

# Refer to https://github.com/golang/dep/blob/master/docs/Gopkg.toml.md

|

||||

# for detailed Gopkg.toml documentation.

|

||||

#

|

||||

# required = ["github.com/user/thing/cmd/thing"]

|

||||

# ignored = ["github.com/user/project/pkgX", "bitbucket.org/user/project/pkgA/pkgY"]

|

||||

#

|

||||

# [[constraint]]

|

||||

# name = "github.com/user/project"

|

||||

# version = "1.0.0"

|

||||

#

|

||||

# [[constraint]]

|

||||

# name = "github.com/user/project2"

|

||||

# branch = "dev"

|

||||

# source = "github.com/myfork/project2"

|

||||

#

|

||||

# [[override]]

|

||||

# name = "github.com/x/y"

|

||||

# version = "2.4.0"

|

||||

#

|

||||

# [prune]

|

||||

# non-go = false

|

||||

# go-tests = true

|

||||

# unused-packages = true

|

||||

|

||||

|

||||

[[constraint]]

|

||||

name = "github.com/codegangsta/negroni"

|

||||

version = "0.3.0"

|

||||

|

||||

[[constraint]]

|

||||

name = "github.com/dgrijalva/jwt-go"

|

||||

version = "3.1.0"

|

||||

|

||||

[[constraint]]

|

||||

name = "github.com/documize/blackfriday"

|

||||

version = "2.0.0"

|

||||

|

||||

[[constraint]]

|

||||

branch = "master"

|

||||

name = "github.com/documize/glick"

|

||||

|

||||

[[constraint]]

|

||||

branch = "master"

|

||||

name = "github.com/documize/html-diff"

|

||||

|

||||

[[constraint]]

|

||||

name = "github.com/elazarl/go-bindata-assetfs"

|

||||

version = "1.0.0"

|

||||

|

||||

[[constraint]]

|

||||

name = "github.com/go-sql-driver/mysql"

|

||||

version = "1.3.0"

|

||||

|

||||

[[constraint]]

|

||||

name = "github.com/google/go-github"

|

||||

version = "15.0.0"

|

||||

|

||||

[[constraint]]

|

||||

name = "github.com/gorilla/mux"

|

||||

version = "1.6.1"

|

||||

|

||||

[[constraint]]

|

||||

branch = "master"

|

||||

name = "github.com/jmoiron/sqlx"

|

||||

|

||||

[[constraint]]

|

||||

branch = "master"

|

||||

name = "github.com/nu7hatch/gouuid"

|

||||

|

||||

[[constraint]]

|

||||

name = "github.com/pkg/errors"

|

||||

version = "0.8.0"

|

||||

|

||||

[[constraint]]

|

||||

branch = "master"

|

||||

name = "golang.org/x/crypto"

|

||||

|

||||

[[constraint]]

|

||||

branch = "master"

|

||||

name = "golang.org/x/net"

|

||||

|

||||

[[constraint]]

|

||||

branch = "master"

|

||||

name = "golang.org/x/oauth2"

|

||||

|

||||

[prune]

|

||||

go-tests = true

|

||||

unused-packages = true

|

||||

|

||||

[[constraint]]

|

||||

name = "github.com/documize/slug"

|

||||

version = "1.1.1"

|

||||

|

||||

[[constraint]]

|

||||

name = "gopkg.in/andygrunwald/go-jira.v1"

|

||||

version = "1.5.0"

|

||||

2572

NOTICES.md

Normal file

File diff suppressed because it is too large

143

README.md

|

|

@ -1,135 +1,110 @@

|

|||

> We provide frequent product releases ensuring self-host customers enjoy the same features as our cloud/SaaS customers.

|

||||

>

|

||||

> Harvey Kandola, CEO & Founder, Documize Inc.

|

||||

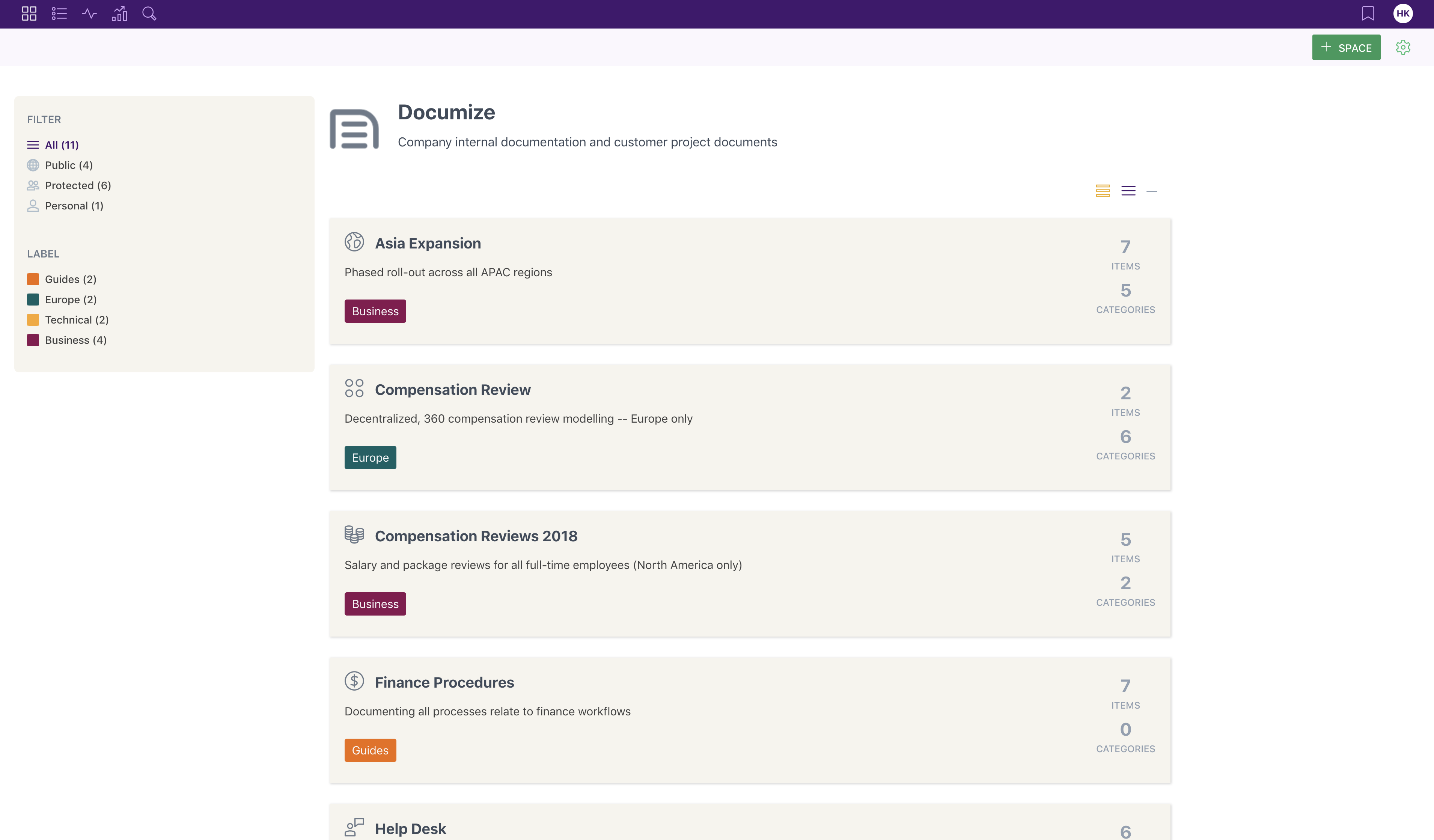

Documize Community is an open source, modern, self-hosted, enterprise-grade knowledge management solution.

|

||||

|

||||

## The Mission

|

||||

- Built for technical and non-technical users

|

||||

- Designed to unify both customer-facing and internal documentation

|

||||

- Organization through labels, spaces and categories

|

||||

|

||||

To bring software development inspired features to the world of documenting -- refactoring, importing, testing, linting, metrics, PRs, versioning....

|

||||

It's built with Golang + EmberJS and compiled down to a single executable binary that is available for Linux, Windows and Mac.

|

||||

|

||||

## What is it?

|

||||

All you need to provide is your database -- PostgreSQL, Microsoft SQL Server or any MySQL variant.

|

||||

|

||||

Documize is an intelligent document environment (IDE) for authoring, tracking and delivering documentation -- everything you need in one place.

|

||||

|

||||

## Why should I care?

|

||||

|

||||

Because maybe like us you're tired of:

|

||||

|

||||

* juggling WYSIWYG editors, wiki software and other document related solutions

|

||||

* playing email tennis with documents, contributions, versions and feedback

|

||||

* sharing not-so-secure folders with external participants

|

||||

|

||||

Sound familiar? Read on.

|

||||

|

||||

## Who is it for?

|

||||

|

||||

Anyone who wants a single place for any kind of document.

|

||||

|

||||

Anyone who wants to loop in external participants with complete security.

|

||||

|

||||

Anyone who wishes documentation and knowledge capture worked like agile software development.

|

||||

|

||||

Anyone who knows that nested folders fail miserably.

|

||||

|

||||

Anyone who wants to move on from wiki software.

|

||||

|

||||

## What's different about Documize?

|

||||

|

||||

Sane organization through personal, team and public spaces.

|

||||

|

||||

Granular document access control via categories.

|

||||

|

||||

Section based approach to document construction.

|

||||

|

||||

Reusable templates and content blocks.

|

||||

|

||||

Documentation related tasking and delegation.

|

||||

|

||||

Integrations for embedding SaaS data within documents, zero add-on/marketplace fees.

|

||||

|

||||

## What does it look like?

|

||||

|

||||

|

||||

|

||||

[See it live](https://docs.documize.com)

|

||||

|

||||

|

||||

## Latest Release

|

||||

|

||||

[Community Edition: v2.0.4](https://github.com/documize/community/releases)

|

||||

[Community edition: v5.13.0](https://github.com/documize/community/releases)

|

||||

|

||||

[Enterprise Edition: v2.0.4](https://documize.com/downloads)

|

||||

[Community+ edition: v5.13.0](https://www.documize.com/community/get-started)

|

||||

|

||||

## OS support

|

||||

The Community+ edition is the "enterprise" offering with advanced capabilities and customer support:

|

||||

|

||||

Documize can be installed and run on:

|

||||

- content approval workflows

|

||||

- content organization by label, space and category

|

||||

- content version management

|

||||

- content lifecycle management

|

||||

- content feedback capture

|

||||

- content PDF export

|

||||

- analytics and reporting

|

||||

- activity streams

|

||||

- audit logs

|

||||

- actions assignments

|

||||

- product support

|

||||

|

||||

The Community+ edition is [free](https://www.documize.com/community/get-started) for the first five users -- thereafter pricing starts at just $900 annually for 100 users.

|

||||

|

||||

## OS Support

|

||||

|

||||

- Linux

|

||||

- Windows

|

||||

- macOS

|

||||

- Raspberry Pi (ARM build)

|

||||

|

||||

Heck, Documize will probably run just fine on a Raspberry Pi 3.

|

||||

Support for AMD and ARM 64 bit architectures.

|

||||

|

||||

## Database Support

|

||||

|

||||

Documize supports the following database systems:

|

||||

For all database types, Full-Text Search (FTS) support is mandatory.

|

||||

|

||||

- PostgreSQL (v9.6+)

|

||||

- Microsoft SQL Server (2016+ with FTS)

|

||||

- Microsoft SQL Azure (v12+)

|

||||

- MySQL (v5.7.10+ and v8.0.12+)

|

||||

- Percona (v5.7.16-10+)

|

||||

- MariaDB (10.3.0+)

|

||||

|

||||

Coming soon: Microsoft SQL Server 2017 (Linux/Windows).

|

||||

|

||||

## Browser Support

|

||||

|

||||

Documize supports the following (evergreen) browsers:

|

||||

|

||||

- Chrome

|

||||

- Firefox

|

||||

- Chrome

|

||||

- Safari

|

||||

- Microsoft Edge

|

||||

- Brave

|

||||

- Vivaldi

|

||||

- Opera

|

||||

- Microsoft Edge (v42+)

|

||||

|

||||

## Technology Stack

|

||||

|

||||

Documize is built with the following:

|

||||

|

||||

- Ember JS (v3.7.2)

|

||||

- Go (v1.11.5)

|

||||

- Go (v1.23.4)

|

||||

- Ember JS (v3.12.0)

|

||||

|

||||

## Authentication Options

|

||||

|

||||

Besides email/password login, you can also leverage the following options.

|

||||

Besides email/password login, you can also authenticate via:

|

||||

|

||||

### LDAP / Active Directory

|

||||

* LDAP

|

||||

* Active Directory

|

||||

* Red Hat Keycloak

|

||||

* Central Authentication Service (CAS)

|

||||

|

||||

Connect and sync Documize with any LDAP v3 compliant provider including Microsoft Active Directory.

|

||||

When using LDAP/Active Directory, you can enable dual-authentication with email/password.

|

||||

|

||||

### Keycloak Integration

|

||||

## Localization

|

||||

|

||||

Documize provides out-of-the-box integration with [Redhat Keycloak](http://www.keycloak.org) for open source identity and access management.

|

||||

Languages supported out-of-the-box:

|

||||

|

||||

Connect and authenticate with LDAP, Active Directory or leverage Social Login.

|

||||

- English

|

||||

- German

|

||||

- French

|

||||

- Chinese (中文)

|

||||

- Portuguese (Brazil) (Português - Brasil)

|

||||

- Japanese (日本語)

|

||||

- Italian

|

||||

- Spanish Argentinian

|

||||

|

||||

<https://docs.documize.com>

|

||||

PR's welcome for additional languages.

|

||||

|

||||

### Auth0 Compatible

|

||||

## Product/Technical Support

|

||||

|

||||

Documize is compatible with Auth0 identity as a service.

|

||||

For both Community and Community+ editions, please contact our help desk for product help, suggestions and other enquiries.

|

||||

|

||||

[](https://auth0.com/?utm_source=oss&utm_medium=gp&utm_campaign=oss)

|

||||

<support@documize.com>

|

||||

|

||||

Open Source Identity and Access Management

|

||||

|

||||

## Developer's Note

|

||||

|

||||

We try to follow sound advice when writing commit messages:

|

||||

|

||||

https://chris.beams.io/posts/git-commit/

|

||||

We aim to respond within two working days.

|

||||

|

||||

## The Legal Bit

|

||||

|

||||

<https://documize.com>

|

||||

<https://www.documize.com>

|

||||

|

||||

This software (Documize Community Edition) is licensed under GNU AGPL v3 <http://www.gnu.org/licenses/agpl-3.0.en.html>. You can operate outside the AGPL restrictions by purchasing Documize Enterprise Edition and obtaining a commercial license by contacting <sales@documize.com>. Documize® is a registered trade mark of Documize Inc.

|

||||

This software (Documize Community edition) is licensed under GNU AGPL v3 <http://www.gnu.org/licenses/agpl-3.0.en.html>.

|

||||

|

||||

Documize Community uses other open source components and we acknowledge them in [NOTICES](NOTICES.md)

|

||||

|

|

|

|||

79

build.bat

|

|

@ -7,56 +7,61 @@ echo "Building Ember assets..."

|

|||

cd gui

|

||||

call ember b -o dist-prod/ --environment=production

|

||||

::Call allows the rest of the file to run

|

||||

|

||||

echo "Copying Ember assets..."

|

||||

cd ..

|

||||

|

||||

rd /s /q embed\bindata\public

|

||||

mkdir embed\bindata\public

|

||||

rd /s /q edition\static\public

|

||||

mkdir edition\static\public

|

||||

echo "Copying Ember assets folder"

|

||||

robocopy /e /NFL /NDL /NJH gui\dist-prod\assets embed\bindata\public\assets

|

||||

robocopy /e /NFL /NDL /NJH gui\dist-prod\assets edition\static\public\assets

|

||||

echo "Copying Ember codemirror folder"

|

||||

robocopy /e /NFL /NDL /NJH gui\dist-prod\codemirror embed\bindata\public\codemirror

|

||||

robocopy /e /NFL /NDL /NJH gui\dist-prod\codemirror edition\static\public\codemirror

|

||||

echo "Copying Ember prism folder"

|

||||

robocopy /e /NFL /NDL /NJH gui\dist-prod\prism embed\bindata\public\prism

|

||||

robocopy /e /NFL /NDL /NJH gui\dist-prod\prism edition\static\public\prism

|

||||

echo "Copying Ember tinymce folder"

|

||||

robocopy /e /NFL /NDL /NJH gui\dist-prod\tinymce embed\bindata\public\tinymce

|

||||

robocopy /e /NFL /NDL /NJH gui\dist-prod\tinymce edition\static\public\tinymce

|

||||

echo "Copying Ember pdfjs folder"

|

||||

robocopy /e /NFL /NDL /NJH gui\dist-prod\pdfjs edition\static\public\pdfjs

|

||||

echo "Copying Ember sections folder"

|

||||

robocopy /e /NFL /NDL /NJH gui\dist-prod\sections embed\bindata\public\sections

|

||||

robocopy /e /NFL /NDL /NJH gui\dist-prod\sections edition\static\public\sections

|

||||

echo "Copying i18n folder"

|

||||

robocopy /e /NFL /NDL /NJH gui\dist-prod\i18n edition\static\public\i18n

|

||||

|

||||

copy gui\dist-prod\*.* embed\bindata

|

||||

copy gui\dist-prod\favicon.ico embed\bindata\public

|

||||

copy gui\dist-prod\manifest.json embed\bindata\public

|

||||

echo "Copying static files"

|

||||

copy gui\dist-prod\*.* edition\static

|

||||

|

||||

rd /s /q embed\bindata\mail

|

||||

mkdir embed\bindata\mail

|

||||

copy domain\mail\*.html embed\bindata\mail

|

||||

copy core\database\templates\*.html embed\bindata

|

||||

echo "Copying favicon.ico"

|

||||

copy gui\dist-prod\favicon.ico edition\static\public

|

||||

|

||||

rd /s /q embed\bindata\scripts

|

||||

mkdir embed\bindata\scripts

|

||||

mkdir embed\bindata\scripts\mysql

|

||||

mkdir embed\bindata\scripts\postgresql

|

||||

echo "Copying manifest.json"

|

||||

copy gui\dist-prod\manifest.json edition\static\public

|

||||

|

||||

echo "Copying mail templates"

|

||||

rd /s /q edition\static\mail

|

||||

mkdir edition\static\mail

|

||||

copy domain\mail\*.html edition\static\mail

|

||||

|

||||

echo "Copying database templates"

|

||||

copy core\database\templates\*.html edition\static

|

||||

|

||||

rd /s /q edition\static\i18n

|

||||

mkdir edition\static\i18n

|

||||

robocopy /e /NFL /NDL /NJH gui\dist-prod\i18n edition\static\i18n *.json

|

||||

|

||||

rd /s /q edition\static\scripts

|

||||

mkdir edition\static\scripts

|

||||

mkdir edition\static\scripts\mysql

|

||||

mkdir edition\static\scripts\postgresql

|

||||

mkdir edition\static\scripts\sqlserver

|

||||

|

||||

echo "Copying database scripts folder"

|

||||

robocopy /e /NFL /NDL /NJH core\database\scripts\mysql embed\bindata\scripts\mysql

|

||||

robocopy /e /NFL /NDL /NJH core\database\scripts\postgresql embed\bindata\scripts\postgresql

|

||||

robocopy /e /NFL /NDL /NJH core\database\scripts\mysql edition\static\scripts\mysql

|

||||

robocopy /e /NFL /NDL /NJH core\database\scripts\postgresql edition\static\scripts\postgresql

|

||||

robocopy /e /NFL /NDL /NJH core\database\scripts\sqlserver edition\static\scripts\sqlserver

|

||||

|

||||

echo "Generating in-memory static assets..."

|

||||

go get -u github.com/jteeuwen/go-bindata/...

|

||||

go get -u github.com/elazarl/go-bindata-assetfs/...

|

||||

cd embed

|

||||

go generate

|

||||

cd ..

|

||||

rd /s /q edition\static\onboard

|

||||

mkdir edition\static\onboard

|

||||

robocopy /e /NFL /NDL /NJH domain\onboard edition\static\onboard *.json

|

||||

|

||||

echo "Compiling Windows"

|

||||

set GOOS=windows

|

||||

go build -gcflags=-trimpath=%GOPATH% -asmflags=-trimpath=%GOPATH% -o bin/documize-community-windows-amd64.exe edition/community.go

|

||||

|

||||

echo "Compiling Linux"

|

||||

set GOOS=linux

|

||||

go build -gcflags=-trimpath=%GOPATH% -asmflags=-trimpath=%GOPATH% -o bin/documize-community-linux-amd64 edition/community.go

|

||||

|

||||

echo "Compiling Darwin"

|

||||

set GOOS=darwin

|

||||

go build -gcflags=-trimpath=%GOPATH% -asmflags=-trimpath=%GOPATH% -o bin/documize-community-darwin-amd64 edition/community.go

|

||||

go build -mod=vendor -trimpath -gcflags="all=-trimpath=$GOPATH" -o bin/documize-community-windows-amd64.exe edition/community.go
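Both build scripts repeat the same three steps for each asset folder: delete the destination, recreate it, then copy the matching files in. A minimal POSIX shell sketch of that pattern is given here. The helper name `refresh_dir` and its three arguments are hypothetical and do not appear in the repository; the paths in the usage comment are taken from the mail template step.

```shell
#!/bin/sh
# Hypothetical helper (not part of the repository): clear a destination
# directory, recreate it, and copy files matching a glob pattern into it.
refresh_dir() {
    src=$1      # source directory, e.g. domain/mail
    dest=$2     # destination directory, e.g. edition/static/mail
    pattern=$3  # glob such as '*.html' or '*.json'

    rm -rf "$dest" || return 1
    mkdir -p "$dest" || return 1
    # $pattern is intentionally unquoted so the shell expands the glob.
    cp "$src"/$pattern "$dest"
}

# Example usage mirroring the mail template step:
# refresh_dir domain/mail edition/static/mail '*.html'
```

Deleting the destination first means files removed from the source do not linger in the output from an earlier build, which is presumably why the scripts do it before every copy.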

|

||||

|

|

|

|||

87

build.sh

|

|

@ -8,56 +8,65 @@ echo "Build process started $NOW"

|

|||

|

||||

echo "Building Ember assets..."

|

||||

cd gui

|

||||

# export NODE_OPTIONS=--openssl-legacy-provider

|

||||

ember build ---environment=production --output-path dist-prod --suppress-sizes true

|

||||

cd ..

|

||||

|

||||

echo "Copying Ember assets..."

|

||||

rm -rf embed/bindata/public

|

||||

mkdir -p embed/bindata/public

|

||||

cp -r gui/dist-prod/assets embed/bindata/public

|

||||

cp -r gui/dist-prod/codemirror embed/bindata/public/codemirror

|

||||

cp -r gui/dist-prod/prism embed/bindata/public/prism

|

||||

cp -r gui/dist-prod/sections embed/bindata/public/sections

|

||||

cp -r gui/dist-prod/tinymce embed/bindata/public/tinymce

|

||||

cp gui/dist-prod/*.* embed/bindata

|

||||

cp gui/dist-prod/favicon.ico embed/bindata/public

|

||||

cp gui/dist-prod/manifest.json embed/bindata/public

|

||||

rm -rf edition/static/public

|

||||

mkdir -p edition/static/public

|

||||

cp -r gui/dist-prod/assets edition/static/public

|

||||

cp -r gui/dist-prod/codemirror edition/static/public/codemirror

|

||||

cp -r gui/dist-prod/prism edition/static/public/prism

|

||||

cp -r gui/dist-prod/sections edition/static/public/sections

|

||||

cp -r gui/dist-prod/tinymce edition/static/public/tinymce

|

||||

cp -r gui/dist-prod/pdfjs edition/static/public/pdfjs

|

||||

cp -r gui/dist-prod/i18n edition/static/public/i18n

|

||||

cp gui/dist-prod/*.* edition/static

|

||||

cp gui/dist-prod/favicon.ico edition/static/public

|

||||

cp gui/dist-prod/manifest.json edition/static/public

|

||||

|

||||

rm -rf embed/bindata/mail

|

||||

mkdir -p embed/bindata/mail

|

||||

cp domain/mail/*.html embed/bindata/mail

|

||||

cp core/database/templates/*.html embed/bindata

|

||||

rm -rf edition/static/mail

|

||||

mkdir -p edition/static/mail

|

||||

cp domain/mail/*.html edition/static/mail

|

||||

cp core/database/templates/*.html edition/static

|

||||

|

||||

rm -rf embed/bindata/scripts

|

||||

mkdir -p embed/bindata/scripts

|

||||

mkdir -p embed/bindata/scripts/mysql

|

||||

mkdir -p embed/bindata/scripts/postgresql

|

||||

cp -r core/database/scripts/mysql/*.sql embed/bindata/scripts/mysql

|

||||

cp -r core/database/scripts/postgresql/*.sql embed/bindata/scripts/postgresql

|

||||

rm -rf edition/static/i18n

|

||||

mkdir -p edition/static/i18n

|

||||

cp -r gui/dist-prod/i18n/*.json edition/static/i18n

|

||||

|

||||

echo "Generating in-memory static assets..."

|

||||

# go get -u github.com/jteeuwen/go-bindata/...

|

||||

# go get -u github.com/elazarl/go-bindata-assetfs/...

|

||||

cd embed

|

||||

go generate

|

||||

rm -rf edition/static/scripts

|

||||

mkdir -p edition/static/scripts

|

||||

mkdir -p edition/static/scripts/mysql

|

||||

mkdir -p edition/static/scripts/postgresql

|

||||

mkdir -p edition/static/scripts/sqlserver

|

||||

cp -r core/database/scripts/mysql/*.sql edition/static/scripts/mysql

|

||||

cp -r core/database/scripts/postgresql/*.sql edition/static/scripts/postgresql

|

||||

cp -r core/database/scripts/sqlserver/*.sql edition/static/scripts/sqlserver

|

||||

|

||||

echo "Compiling app..."

|

||||

cd ..

|

||||

for arch in amd64 ; do

|

||||

for os in darwin linux windows ; do

|

||||

if [ "$os" == "windows" ] ; then

|

||||

echo "Compiling documize-community-$os-$arch.exe"

|

||||

env GOOS=$os GOARCH=$arch go build -gcflags="all=-trimpath=$GOPATH" -o bin/documize-community-$os-$arch.exe ./edition/community.go

|

||||

else

|

||||

echo "Compiling documize-community-$os-$arch"

|

||||

env GOOS=$os GOARCH=$arch go build -gcflags="all=-trimpath=$GOPATH" -o bin/documize-community-$os-$arch ./edition/community.go

|

||||

fi

|

||||

done

|

||||

done

|

||||

rm -rf edition/static/onboard

|

||||

mkdir -p edition/static/onboard

|

||||

cp -r domain/onboard/*.json edition/static/onboard

|

||||

|

||||

echo "Compiling for macOS Intel..."

|

||||

env GOOS=darwin GOARCH=amd64 go build -mod=vendor -trimpath -o bin/documize-community-darwin-amd64 ./edition/community.go

|

||||

echo "Compiling for macOS ARM..."

|

||||

env GOOS=darwin GOARCH=arm64 go build -mod=vendor -trimpath -o bin/documize-community-darwin-arm64 ./edition/community.go

|

||||

echo "Compiling for Windows AMD..."

|

||||

env GOOS=windows GOARCH=amd64 go build -mod=vendor -trimpath -o bin/documize-community-windows-amd64.exe ./edition/community.go

|

||||

echo "Compiling for Linux AMD..."

|

||||

env GOOS=linux GOARCH=amd64 go build -mod=vendor -trimpath -o bin/documize-community-linux-amd64 ./edition/community.go

|

||||

echo "Compiling for Linux ARM..."

|

||||

env GOOS=linux GOARCH=arm go build -mod=vendor -trimpath -o bin/documize-community-linux-arm ./edition/community.go

|

||||

echo "Compiling for Linux ARM64..."

|

||||

env GOOS=linux GOARCH=arm64 go build -mod=vendor -trimpath -o bin/documize-community-linux-arm64 ./edition/community.go

|

||||

echo "Compiling for FreeBSD ARM64..."

|

||||

env GOOS=freebsd GOARCH=arm64 go build -mod=vendor -trimpath -o bin/documize-community-freebsd-arm64 ./edition/community.go

|

||||

echo "Compiling for FreeBSD AMD64..."

|

||||

env GOOS=freebsd GOARCH=amd64 go build -mod=vendor -trimpath -o bin/documize-community-freebsd-amd64 ./edition/community.go

|

||||

|

||||

echo "Finished."

|

||||

|

||||

|

||||

# CGO_ENABLED=0 GOOS=linux go build -a -ldflags="-s -w" -installsuffix cgo

|

||||

# go build -ldflags '-d -s -w' -a -tags netgo -installsuffix netgo test.go

|

||||

# ldd test
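The per-platform compile steps in build.sh differ only in `GOOS`, `GOARCH` and the `.exe` suffix on Windows. As a rough sketch, the same commands could be generated from a target list. The `targets` variable and the `build_cmd` function are hypothetical names, not part of the repository, and the function only prints each command rather than running it.

```shell
#!/bin/sh
# Hypothetical dry-run sketch: print the go build command for each
# OS/architecture pair that build.sh compiles.
targets="darwin/amd64 darwin/arm64 windows/amd64 linux/amd64 linux/arm linux/arm64 freebsd/arm64 freebsd/amd64"

build_cmd() {
    os=${1%/*}    # text before the slash, e.g. linux
    arch=${1#*/}  # text after the slash, e.g. arm64
    ext=""
    if [ "$os" = "windows" ]; then
        ext=".exe"  # Windows binaries carry an .exe suffix
    fi
    printf 'env GOOS=%s GOARCH=%s go build -mod=vendor -trimpath -o bin/documize-community-%s-%s%s ./edition/community.go\n' \
        "$os" "$arch" "$os" "$arch" "$ext"
}

for t in $targets; do
    build_cmd "$t"
done
```

Piping the output to `sh` from the repository root would run the builds; printing first makes it easy to check the list of targets before compiling anything.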

|

||||

|

|

|

|||

23

compile.sh

|

|

@ -1,23 +0,0 @@

|

|||

#! /bin/bash

|

||||

|

||||

echo "Generating in-memory static assets..."

|

||||

# go get -u github.com/jteeuwen/go-bindata/...

|

||||

# go get -u github.com/elazarl/go-bindata-assetfs/...

|

||||

cd embed

|

||||

go generate

|

||||

|

||||

echo "Compiling app..."

|

||||

cd ..

|

||||

for arch in amd64 ; do

|

||||

for os in darwin linux windows ; do

|

||||

if [ "$os" == "windows" ] ; then

|

||||

echo "Compiling documize-community-$os-$arch.exe"

|

||||

env GOOS=$os GOARCH=$arch go build -gcflags=-trimpath=$GOPATH -asmflags=-trimpath=$GOPATH -o bin/documize-community-$os-$arch.exe ./edition/community.go

|

||||

else

|

||||

echo "Compiling documize-community-$os-$arch"

|

||||

env GOOS=$os GOARCH=$arch go build -gcflags=-trimpath=$GOPATH -asmflags=-trimpath=$GOPATH -o bin/documize-community-$os-$arch ./edition/community.go

|

||||

fi

|

||||

done

|

||||

done

|

||||

|

||||

echo "Finished."

|

||||

|

|

@ -19,8 +19,8 @@ import (

|

|||

"net/http"

|

||||

"path/filepath"

|

||||

|

||||

"context"

|

||||

api "github.com/documize/community/core/convapi"

|

||||

"golang.org/x/net/context"

|

||||

)

|

||||

|

||||

// Msword type provides a peg to hang the Convert method on.

|

||||

|

|

|

|||

|

|

@ -19,7 +19,7 @@ import (

|

|||

"github.com/documize/community/core/api/plugins"

|

||||

api "github.com/documize/community/core/convapi"

|

||||

|

||||

"golang.org/x/net/context"

|

||||

"context"

|

||||

)

|

||||

|

||||

// Convert provides the entry-point into the document conversion process.

|

||||

|

|

|

|||

|

|

@ -1,155 +0,0 @@

|

|||

// Copyright 2016 Documize Inc. <legal@documize.com>. All rights reserved.

|

||||

//

|

||||

// This software (Documize Community Edition) is licensed under

|

||||

// GNU AGPL v3 http://www.gnu.org/licenses/agpl-3.0.en.html

|

||||

//

|

||||

// You can operate outside the AGPL restrictions by purchasing

|

||||

// Documize Enterprise Edition and obtaining a commercial license

|

||||

// by contacting <sales@documize.com>.

|

||||

//

|

||||

// https://documize.com

|

||||

|

||||

package convert_test

|

||||

|

||||

import (

|

||||

"strings"

|

||||

"testing"

|

||||

|

||||